Our OpenStack Controller integration collects information from all compute nodes and the servers running it. All metrics collected from these OpenStack services are ingested into your New Relic account (NRDB) for analysis, visualization, and alerting, so you can view your most important data in one place.

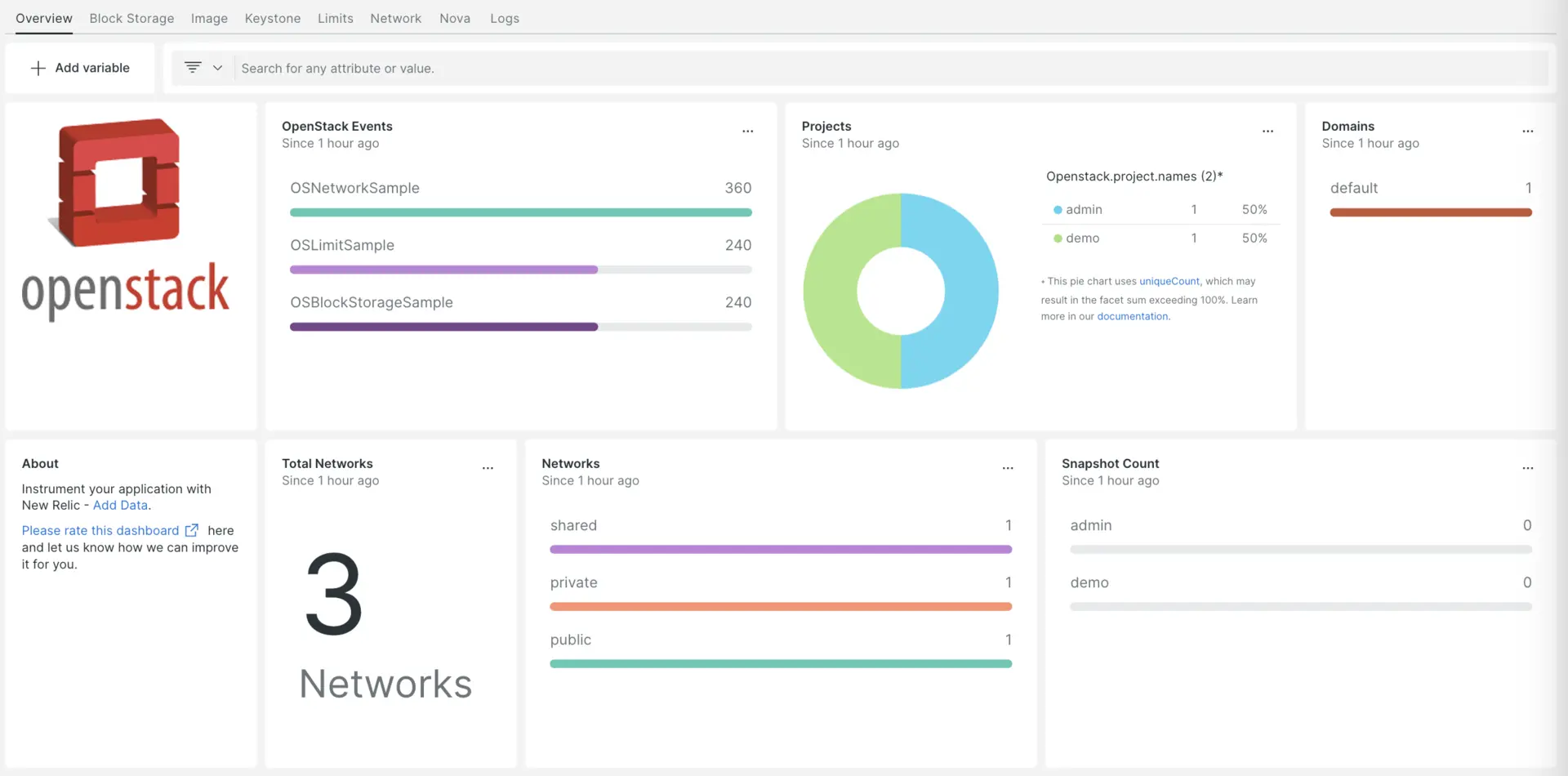

After setting up our OpenStack Controller integration, you can install a dashboard for your OpenStack Controller metrics.

Install the infrastructure agent

To get data into New Relic, install our infrastructure agent, which collects and ingests data so you can keep track of your app's performance. The agent must be version 1.10.7 or higher to support the nri-flex integration.

You can install the infrastructure agent two different ways:

- Our guided install is a CLI tool that inspects your system and installs the infrastructure agent alongside the application monitoring agent that best works for your system. To learn more about how our guided install works, check out our Guided install overview.

- If you'd rather install our infrastructure agent manually, you can follow a tutorial for manual installation for Linux, Windows, or macOS.
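After installing, you may want to confirm that the agent meets the 1.10.7 minimum mentioned above. The sketch below is illustrative only: `parse_version` and `meets_minimum` are hypothetical helpers, and they assume you already have the agent's version as a plain dotted string such as `1.10.7`.

```python
MINIMUM_AGENT_VERSION = "1.10.7"  # minimum stated above for nri-flex support


def parse_version(version: str) -> tuple:
    """Turn a dotted version string such as '1.10.7' into (1, 10, 7)."""
    return tuple(int(part) for part in version.strip().split("."))


def meets_minimum(installed: str, minimum: str = MINIMUM_AGENT_VERSION) -> bool:
    """Return True if `installed` is at least `minimum`."""
    return parse_version(installed) >= parse_version(minimum)


if __name__ == "__main__":
    # Sample version strings chosen for illustration only.
    for candidate in ("1.9.12", "1.10.7", "1.48.0"):
        print(candidate, meets_minimum(candidate))
```

Comparing integer components matters here: as plain strings, `1.9.12` would sort after `1.10.7`, even though it is the older release.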

Configure nr1-openstack

- Clone the repository: `git clone https://github.com/newrelic-experimental/nr1-openstack`

- Open the `openstack-agent` directory in the cloned repository: `cd nr1-openstack/openstack-agent/`

- Rename the file `config/os-config.json.template` to `config/os-config.json`, and edit it as described in the following steps.

- Update `nr_agent_home` with the parent directory of the `config/` folder in your cloned repository.

- To see the details of the endpoint URLs, run: `openstack catalog list`

- To get the value of `keystone_url`, copy the endpoint URL for `name: keystone` and `type: identity`.

- To find the `keystone_api_version`, run: `curl -i http://<HOST_IP>/identity`

- To find the `glance_api_version`, run: `curl -i http://<HOST_IP>/image`

- Update `ADMIN_PASSWORD` in the configuration file below with your OpenStack login password.
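The edits described in these steps can also be scripted. The following is a minimal sketch, not part of the nr1-openstack repository: `write_os_config` is a hypothetical helper, and it assumes the template has the same structure as the example configuration in this section (a top-level `config` object containing `nr_agent_home`, `keystone_url`, and `user.password`).

```python
import json
from pathlib import Path


def write_os_config(template_path, output_path, nr_agent_home,
                    keystone_url, admin_password):
    """Write os-config.json from the template with site-specific values set.

    Assumes the template has a top-level "config" object containing
    "nr_agent_home", "keystone_url", and "user" -> "password".
    """
    config = json.loads(Path(template_path).read_text())
    settings = config["config"]
    settings["nr_agent_home"] = nr_agent_home
    settings["keystone_url"] = keystone_url
    settings["user"]["password"] = admin_password
    # Write the result as indented JSON so later manual edits stay readable.
    Path(output_path).write_text(json.dumps(config, indent=2) + "\n")
    return config
```

Run from the `openstack-agent` directory, a call such as `write_os_config("config/os-config.json.template", "config/os-config.json", nr_agent_home, keystone_url, admin_password)` leaves the template untouched and writes the filled-in copy next to it. Every other key in the template is carried over unchanged.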

Once your JSON file is updated, it will look like this:

    {
      "config": {
        "nr_agent_home": "nr_agent_home",
        "keystone_url": "http://HOST_IP/identity",
        "keystone_api_version": "v3",
        "nova_api_version": "v2.1",
        "cinder_api_version": "v3",
        "neutron_api_version": "v2.0",
        "glance_api_version": "v2.16",
        "ssl_verify": false,
        "add_lists": true,
        "user": {
          "password": "ADMIN_PASSWORD",
          "name": "admin",
          "domain": {
            "id": "default"
          }
        },
        "service_types": {
          "keystone": {
            "enabled": true,
            "component_name": "identity",
            "metrics": [
              "openstack.identity.credentials_count",
              "openstack.identity.domains_count",
              "openstack.identity.groups_count",
              "openstack.identity.policies_count",
              "openstack.identity.projects_count",
              "openstack.identity.regions_count",
              "openstack.identity.roles_count",
              "openstack.identity.services_count",
              "openstack.identity.users_count",
              "openstack.identity.floatingips_count",
              "openstack.identity.routers_count",
              "openstack.identity.security_groups_count",
              "openstack.identity.subnets_count"
            ]
          },
          "hypervisors": {
            "enabled": true,
            "component_name": "nova",
            "metrics": [
              "openstack.nova.hypervisor.current_workload",
              "openstack.nova.hypervisor.disk_available_least",
              "openstack.nova.hypervisor.free_disk_gb",
              "openstack.nova.hypervisor.free_ram_mb",
              "openstack.nova.hypervisor.host_ip",
              "openstack.nova.hypervisor.hypervisor_hostname",
              "openstack.nova.hypervisor.hypervisor_type",
              "openstack.nova.hypervisor.hypervisor_version",
              "openstack.nova.hypervisor.id",
              "openstack.nova.hypervisor.load_average_1",
              "openstack.nova.hypervisor.load_average_15",
              "openstack.nova.hypervisor.load_average_5",
              "openstack.nova.hypervisor.local_gb",
              "openstack.nova.hypervisor.local_gb_used",
              "openstack.nova.hypervisor.memory_mb",
              "openstack.nova.hypervisor.memory_mb_used",
              "openstack.nova.hypervisor.running_vms",
              "openstack.nova.hypervisor.service.disabled_reason",
              "openstack.nova.hypervisor.service.host",
              "openstack.nova.hypervisor.service.id",
              "openstack.nova.hypervisor.state",
              "openstack.nova.hypervisor.status",
              "openstack.nova.hypervisor.uptime",
              "openstack.nova.hypervisor.user_count",
              "openstack.nova.hypervisor.vcpus",
              "openstack.nova.hypervisor.vcpus_used"
            ]
          },
          "resource_providers": {
            "enabled": true,
            "component_name": "placement",
            "metrics": [
              "openstack.placement.inventories.DISK_GB.allocation_ratio",
              "openstack.placement.inventories.DISK_GB.max_unit",
              "openstack.placement.inventories.DISK_GB.min_unit",
              "openstack.placement.inventories.DISK_GB.reserved",
              "openstack.placement.inventories.DISK_GB.step_size",
              "openstack.placement.inventories.DISK_GB.total",
              "openstack.placement.inventories.MEMORY_MB.allocation_ratio",
              "openstack.placement.inventories.MEMORY_MB.max_unit",
              "openstack.placement.inventories.MEMORY_MB.min_unit",
              "openstack.placement.inventories.MEMORY_MB.reserved",
              "openstack.placement.inventories.MEMORY_MB.step_size",
              "openstack.placement.inventories.MEMORY_MB.total",
              "openstack.placement.inventories.VCPU.allocation_ratio",
              "openstack.placement.inventories.VCPU.max_unit",
              "openstack.placement.inventories.VCPU.min_unit",
              "openstack.placement.inventories.VCPU.reserved",
              "openstack.placement.inventories.VCPU.step_size",
              "openstack.placement.inventories.VCPU.total",
              "openstack.placement.resource.name",
              "openstack.placement.resource.uuid",
              "openstack.placement.resource_provider_generation",
              "openstack.placement.usages.DISK_GB",
              "openstack.placement.usages.MEMORY_MB",
              "openstack.placement.usages.VCPU"
            ]
          },
          "images": {
            "enabled": true,
            "component_name": "glance",
            "metrics": [
              "openstack.glance.image.AppCode",
              "openstack.glance.image.Name",
              "openstack.glance.image.ServiceName",
              "openstack.glance.image.ServiceOwner",
              "openstack.glance.image.signature_verified",
              "openstack.glance.image.image_type",
              "openstack.glance.image.checksum",
              "openstack.glance.image.container_format",
              "openstack.glance.image.created_at",
              "openstack.glance.image.disk_format",
              "openstack.glance.image.file",
              "openstack.glance.image.hw_rng_model",
              "openstack.glance.image.id",
              "openstack.glance.image.min_disk",
              "openstack.glance.image.min_ram",
              "openstack.glance.image.name",
              "openstack.glance.image.os_hash_algo",
              "openstack.glance.image.os_hash_value",
              "openstack.glance.image.os_hidden",
              "openstack.glance.image.owner",
              "openstack.glance.image.owner_specified.openstack.md5",
              "openstack.glance.image.owner_specified.openstack.object",
              "openstack.glance.image.owner_specified.openstack.sha256",
              "openstack.glance.image.protected",
              "openstack.glance.image.schema",
              "openstack.glance.image.self",
              "openstack.glance.image.size",
              "openstack.glance.image.status",
              "openstack.glance.image.tags",
              "openstack.glance.image.updated_at",
              "openstack.glance.image.virtual_size",
              "openstack.glance.image.visibility"
            ]
          },
          "nova": {
            "enabled": true,
            "component_name": "compute",
            "metrics": [
              "openstack.compute.agents_count",
              "openstack.compute.aggregates_count",
              "openstack.compute.flavors_count",
              "openstack.compute.keypairs_count",
              "openstack.compute.services_count"
            ]
          },
          "block_storage": {
            "enabled": true,
            "component_name": "cinder",
            "metrics": [
              "openstack.cinder.limits.maxTotalBackupGigabytes",
              "openstack.cinder.limits.maxTotalBackups",
              "openstack.cinder.limits.maxTotalSnapshots",
              "openstack.cinder.limits.maxTotalVolumeGigabytes",
              "openstack.cinder.limits.maxTotalVolumes",
              "openstack.cinder.limits.totalBackupGigabytesUsed",
              "openstack.cinder.limits.totalBackupsUsed",
              "openstack.cinder.limits.totalGigabytesUsed",
              "openstack.cinder.limits.totalSnapshotsUsed",
              "openstack.cinder.limits.totalVolumesUsed",
              "openstack.cinder.snapshots.count",
              "openstack.cinder.snapshots.size",
              "openstack.cinder.volumes.count",
              "openstack.cinder.volumes.size"
            ]
          },
          "limits": {
            "enabled": true,
            "component_name": "nova",
            "metrics": [
              "openstack.nova.limits.maxImageMeta",
              "openstack.nova.limits.maxPersonality",
              "openstack.nova.limits.maxPersonalitySize",
              "openstack.nova.limits.maxSecurityGroupRules",
              "openstack.nova.limits.maxSecurityGroups",
              "openstack.nova.limits.maxServerGroupMembers",
              "openstack.nova.limits.maxServerGroups",
              "openstack.nova.limits.maxServerMeta",
              "openstack.nova.limits.maxTotalCores",
              "openstack.nova.limits.maxTotalFloatingIps",
              "openstack.nova.limits.maxTotalInstances",
              "openstack.nova.limits.maxTotalKeypairs",
              "openstack.nova.limits.maxTotalRAMSize",
              "openstack.nova.limits.totalCoresUsed",
              "openstack.nova.limits.totalFloatingIpsUsed",
              "openstack.nova.limits.totalInstancesUsed",
              "openstack.nova.limits.totalRAMUsed",
              "openstack.nova.limits.totalSecurityGroupsUsed",
              "openstack.nova.limits.totalServerGroupsUsed"
            ]
          },
          "servers": {
            "enabled": true,
            "component_name": "nova",
            "metrics": [
              "openstack.nova.server.cpu0_time",
              "openstack.nova.server.cpu1_time",
              "openstack.nova.server.cpu2_time",
              "openstack.nova.server.cpu3_time",
              "openstack.nova.server.cpu4_time",
              "openstack.nova.server.cpu5_time",
              "openstack.nova.server.cpu6_time",
              "openstack.nova.server.cpu7_time",
              "openstack.nova.server.hypervisor_name",
              "openstack.nova.server.id",
              "openstack.nova.server.memory",
              "openstack.nova.server.memory-actual",
              "openstack.nova.server.memory-available",
              "openstack.nova.server.memory-disk_caches",
              "openstack.nova.server.memory-hugetlb_pgalloc",
              "openstack.nova.server.memory-hugetlb_pgfail",
              "openstack.nova.server.memory-last_update",
              "openstack.nova.server.memory-major_fault",
              "openstack.nova.server.memory-minor_fault",
              "openstack.nova.server.memory-rss",
              "openstack.nova.server.memory-swap_in",
              "openstack.nova.server.memory-swap_out",
              "openstack.nova.server.memory-unused",
              "openstack.nova.server.memory-usable",
              "openstack.nova.server.name",
              "openstack.nova.server.rx",
              "openstack.nova.server.rx_drop",
              "openstack.nova.server.rx_errors",
              "openstack.nova.server.rx_packets",
              "openstack.nova.server.tx",
              "openstack.nova.server.tx_drop",
              "openstack.nova.server.tx_errors",
              "openstack.nova.server.tx_packets",
              "openstack.nova.server.memory-hugetlb_pgfail",
              "openstack.nova.server.vda_errors",
              "openstack.nova.server.vda_read",
              "openstack.nova.server.vda_read_req",
              "openstack.nova.server.vda_write",
              "openstack.nova.server.vda_write_req",
              "openstack.nova.server.vdb_errors",
              "openstack.nova.server.vdb_read",
              "openstack.nova.server.vdb_read_req",
              "openstack.nova.server.vdb_write",
              "openstack.nova.server.vdb_write_req",
              "openstack.nova.server.vdc_errors",
              "openstack.nova.server.vdc_read",
              "openstack.nova.server.vdc_read_req",
              "openstack.nova.server.vdc_write",
              "openstack.nova.server.vdc_write_req",
              "openstack.nova.server.vdd_errors",
              "openstack.nova.server.vdd_read",
              "openstack.nova.server.vdd_read_req",
              "openstack.nova.server.vdd_write",
              "openstack.nova.server.vdd_write_req",
              "openstack.nova.server.vde_errors",
              "openstack.nova.server.vde_read",
              "openstack.nova.server.vde_read_req",
              "openstack.nova.server.vde_write",
              "openstack.nova.server.vde_write_req"
            ]
          },
          "networks": {
            "enabled": true,
            "component_name": "neutron",
            "metrics": [
              "openstack.neutron.network.admin_state_up",
              "openstack.neutron.network.created_at",
              "openstack.neutron.network.description",
              "openstack.neutron.network.floatingips_count",
              "openstack.neutron.network.id",
              "openstack.neutron.network.ipv4_address_scope",
              "openstack.neutron.network.ipv6_address_scope",
              "openstack.neutron.network.is_default",
              "openstack.neutron.network.l2_adjacency",
              "openstack.neutron.network.mtu",
              "openstack.neutron.network.name",
              "openstack.neutron.network.port_security_enabled",
              "openstack.neutron.network.project_id",
              "openstack.neutron.network.provider:network_type",
              "openstack.neutron.network.provider:physical_network",
              "openstack.neutron.network.provider:segmentation_id",
              "openstack.neutron.network.qos_policy_id",
              "openstack.neutron.network.revision_number",
              "openstack.neutron.network.router:external",
              "openstack.neutron.network.routers_count",
              "openstack.neutron.network.security_groups_count",
              "openstack.neutron.network.shared",
              "openstack.neutron.network.status",
              "openstack.neutron.network.subnets_count",
              "openstack.neutron.network.tenant_id",
              "openstack.neutron.network.updated_at"
            ]
          }
        },
        "logging": {
          "logger_name": "nr.os.mon",
          "log_file_name": "nr_openstack_agent.log",
          "log_level": "WARNING",
          "formatter": "%(asctime)-15s | %(name)-18s | %(process)d | %(levelname)-8s | %(threadName)s | %(funcName)-27s | %(lineno)04d | %(message)s"
        }
      }
    }

Use the below command to give execute permission for the scripts folder (`scripts/flex-osmetrics.sh` will be invoked by the New Relic infrastructure agent):

    chmod +x scripts/flex-osmetrics.sh

You can disable the capture of any resource by setting `enabled` to `false` for that resource in `config/os-config.json`.
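As a sketch of that last step, the snippet below sets `enabled` to `false` for selected entries under `service_types`. `disable_resources` is a hypothetical helper, not part of the repository, and it assumes the file follows the structure of the example configuration above.

```python
import json
from pathlib import Path


def disable_resources(config_path, resource_names):
    """Set "enabled" to false for the named entries under "service_types".

    Returns the names that were found and disabled; unknown names are skipped.
    """
    path = Path(config_path)
    config = json.loads(path.read_text())
    service_types = config["config"]["service_types"]
    disabled = []
    for name in resource_names:
        if name in service_types:
            service_types[name]["enabled"] = False
            disabled.append(name)
    # Rewrite the file in place with the updated flags.
    path.write_text(json.dumps(config, indent=2) + "\n")
    return disabled
```

For example, `disable_resources("config/os-config.json", ["images", "networks"])` would turn off the `images` and `networks` entries while leaving every other resource as it was. The returned list makes it easy to spot a misspelled resource name, since names that are not present in the file are not reported back.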

Configure nri-flex for OpenStack

When you install the infrastructure agent on your host, the nri-flex binary is installed with it.

To create a Flex configuration file, follow these steps:

Forward OpenStack Controller logs to New Relic

You can use our log forwarding to forward OpenStack Controller logs to New Relic.

On Linux machines, your log forwarding configuration file, `logging.yml`, should be in this directory:

    cd /etc/newrelic-infra/logging.d/

Add the following configuration to the `logging.yml` file to send OpenStack Controller logs to New Relic:

    logs:
      - name: openstack.log
        file: <nr1-openstack-DIRECTORY>/openstack-agent/logs/nr_openstack_agent.log
        attributes:
          logtype: openstack_log

Restart the New Relic infrastructure agent

Before you can start reading your data, use the instructions in our infrastructure agent docs to restart your infrastructure agent.

    sudo systemctl restart newrelic-infra.service

Monitor your application

You can choose our pre-built dashboard template named Openstack Controller to monitor your Openstack application metrics.

- Go to one.newrelic.com and click on + Integrations & Agents.

- Click on the Dashboards tab.

- In the search box, type `Openstack Controller`.

- When you see our pre-built dashboard, click it to install it in your account.

Once your application is integrated by following the above steps, the dashboard should display metrics.

To instrument the OpenStack quickstart and see metrics and alerts, you can also go to our OpenStack Controller quickstart page and click the Install now button.

Here are some example queries:

Example: view the count of the event types

    select count(*) from OSBlockStorageSample, OSLimitSample, OSNetworkSample, OSResourceProviderSample, OSImageSample, OSKeystoneSample, OSNovaSample since 10 minutes ago facet eventType() timeseries

What's next?

To learn more about building NRQL queries and generating dashboards, check out these docs:

- Introduction to the query builder to create basic and advanced queries.

- Introduction to dashboards to customize your dashboard and carry out different actions.

- Manage your dashboard to adjust your display mode, or to add more content to your dashboard.