The Snowflake integration lets you collect comprehensive data on query performance, storage system health, warehouse status, billing information, and more.

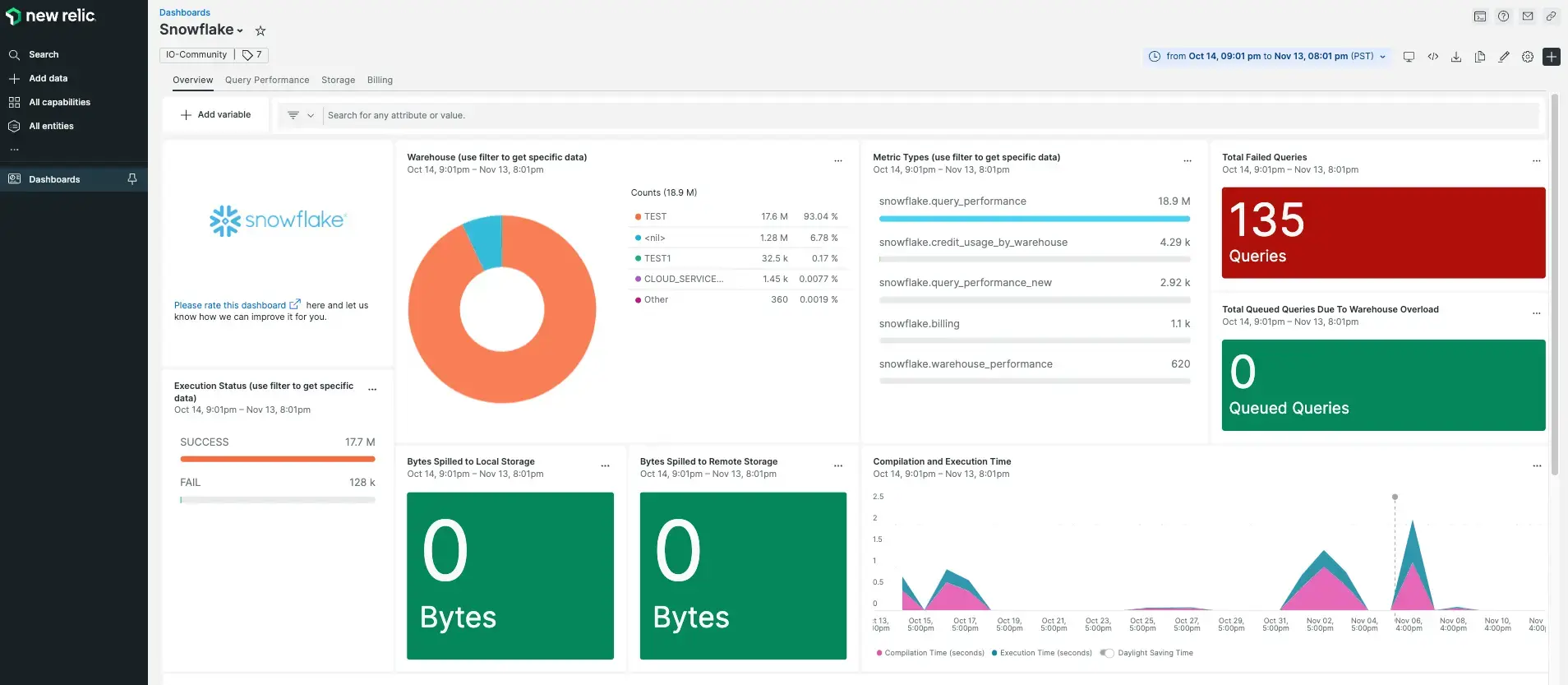

After you set up the Snowflake integration with New Relic, view your data in out-of-the-box dashboards like this one.

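Install the infrastructure agent

To use the Snowflake integration, you must also install the infrastructure agent on the same host. The infrastructure agent monitors the host itself, while the integration you install in the next step extends monitoring to Snowflake-specific data.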

Set up Snowflake metrics

Run the commands below to store Snowflake metrics in JSON format so that nri-flex can read them. Replace ACCOUNT, USERNAME, and SNOWSQL_PWD with your own values.
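
The commands that follow repeat the same snowsql options for every query. As a minimal sketch, the repetition could be wrapped in one helper function that also writes each result to a temporary file first, so nri-flex never reads a half-written JSON file. The function name `run_query` and the `SNOWFLAKE_ACCOUNT` and `SNOWFLAKE_USER` variables are assumptions made for illustration, not part of the integration, and the sketch assumes `SNOWSQL_PWD` is already exported in the environment.

```shell
#!/usr/bin/env bash
# Sketch only: wraps the repeated snowsql invocation in one helper.
# Assumptions (not from the original page): SNOWFLAKE_ACCOUNT, SNOWFLAKE_USER,
# and SNOWSQL_PWD are set in the environment before this script runs.

# run_query SQL OUTPUT_FILE
# Runs one query with the same -o options used in this section and replaces
# OUTPUT_FILE only when snowsql succeeds.
run_query() {
  local sql="$1" out="$2" tmp
  # Create the temporary file next to the target so mv is a same-directory rename.
  tmp="$(mktemp "${out}.XXXXXX")" || return 1
  if snowsql -o output_format=json -o remove_comments=true -o header=true \
       -o timing=false -o friendly=false \
       -a "$SNOWFLAKE_ACCOUNT" -u "$SNOWFLAKE_USER" -q "$sql" > "$tmp"; then
    chmod 644 "$tmp"   # mktemp creates the file as 0600; make it readable
    mv "$tmp" "$out"
  else
    rm -f "$tmp"       # keep the previous output file untouched on failure
    return 1
  fi
}

# Example call, using one of the queries from this section:
# run_query 'SELECT STORAGE_BYTES, STAGE_BYTES, FAILSAFE_BYTES FROM "SNOWFLAKE"."ACCOUNT_USAGE"."STORAGE_USAGE" ORDER BY USAGE_DATE DESC LIMIT 1;' /tmp/snowflake-storage-usage.json
```

If the helper and its calls were saved as a script, a crontab entry such as `* * * * * /usr/local/bin/snowflake-metrics.sh` (a hypothetical path) would run it every minute, matching the 1-minute schedule the command comment asks for.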

    # Run the below command as a 1 minute cronjob
    SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT SERVICE_TYPE, NAME, AVG(CREDITS_USED_COMPUTE) AS "CREDITS_USED_COMPUTE_AVERAGE", SUM(CREDITS_USED_COMPUTE) AS "CREDITS_USED_COMPUTE_SUM", AVG(CREDITS_USED_CLOUD_SERVICES) AS "CREDITS_USED_CLOUD_SERVICES_AVERAGE", SUM(CREDITS_USED_CLOUD_SERVICES) AS "CREDITS_USED_CLOUD_SERVICES_SUM", AVG(CREDITS_USED) AS "CREDITS_USED_AVERAGE", SUM(CREDITS_USED) AS "CREDITS_USED_SUM" FROM "SNOWFLAKE"."ACCOUNT_USAGE"."METERING_HISTORY" WHERE start_time >= DATE_TRUNC(day, CURRENT_DATE()) GROUP BY 1, 2;' > /tmp/snowflake-account-metering.json
    SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT WAREHOUSE_NAME, AVG(AVG_RUNNING) AS "RUNNING_AVERAGE", AVG(AVG_QUEUED_LOAD) AS "QUEUED_LOAD_AVERAGE", AVG(AVG_QUEUED_PROVISIONING) AS "QUEUED_PROVISIONING_AVERAGE", AVG(AVG_BLOCKED) AS "BLOCKED_AVERAGE" FROM "SNOWFLAKE"."ACCOUNT_USAGE"."WAREHOUSE_LOAD_HISTORY" GROUP BY 1;' > /tmp/snowflake-warehouse-load-history-metrics.json
    SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT WAREHOUSE_NAME, avg(CREDITS_USED_COMPUTE) as "CREDITS_USED_COMPUTE_AVERAGE", sum(CREDITS_USED_COMPUTE) as "CREDITS_USED_COMPUTE_SUM", avg(CREDITS_USED_CLOUD_SERVICES) as "CREDITS_USED_CLOUD_SERVICES_AVERAGE", sum(CREDITS_USED_CLOUD_SERVICES) as "CREDITS_USED_CLOUD_SERVICES_SUM", avg(CREDITS_USED) as "CREDITS_USED_AVERAGE", sum(CREDITS_USED) as "CREDITS_USED_SUM" from "SNOWFLAKE"."ACCOUNT_USAGE"."WAREHOUSE_METERING_HISTORY" group by 1;' > /tmp/snowflake-warehouse-metering.json
    SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT table_name, table_schema, avg(ACTIVE_BYTES) as "ACTIVE_BYTES_AVERAGE", avg(TIME_TRAVEL_BYTES) as "TIME_TRAVEL_BYTES_AVERAGE", avg(FAILSAFE_BYTES) as "FAILSAFE_BYTES_AVERAGE", avg(RETAINED_FOR_CLONE_BYTES) as "RETAINED_FOR_CLONE_BYTES_AVERAGE" from "SNOWFLAKE"."ACCOUNT_USAGE"."TABLE_STORAGE_METRICS" group by 1, 2;' > /tmp/snowflake-table-storage-metrics.json
    SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT STORAGE_BYTES, STAGE_BYTES, FAILSAFE_BYTES FROM "SNOWFLAKE"."ACCOUNT_USAGE"."STORAGE_USAGE" ORDER BY USAGE_DATE DESC LIMIT 1;' > /tmp/snowflake-storage-usage.json
    SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT USAGE_DATE, AVG(AVERAGE_STAGE_BYTES) FROM "SNOWFLAKE"."ACCOUNT_USAGE"."STAGE_STORAGE_USAGE_HISTORY" GROUP BY USAGE_DATE;' > /tmp/snowflake-stage-storage-usage-history.json
    SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT DATABASE_NAME, AVG(CREDITS_USED) AS "CREDITS_USED_AVERAGE", SUM(CREDITS_USED) AS "CREDITS_USED_SUM", AVG(BYTES_TRANSFERRED) AS "BYTES_TRANSFERRED_AVERAGE", SUM(BYTES_TRANSFERRED) AS "BYTES_TRANSFERRED_SUM" FROM "SNOWFLAKE"."ACCOUNT_USAGE"."REPLICATION_USAGE_HISTORY" GROUP BY DATABASE_NAME;' > /tmp/snowflake-replication-usage-history.json
    SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT QUERY_TYPE, WAREHOUSE_NAME, DATABASE_NAME, SCHEMA_NAME, AVG(EXECUTION_TIME) AS "EXECUTION_TIME_AVERAGE", AVG(COMPILATION_TIME) AS "COMPILATION_TIME_AVERAGE", AVG(BYTES_SCANNED) AS "BYTES_SCANNED_AVERAGE", AVG(BYTES_WRITTEN) AS "BYTES_WRITTEN_AVERAGE", AVG(BYTES_DELETED) AS "BYTES_DELETED_AVERAGE", AVG(BYTES_SPILLED_TO_LOCAL_STORAGE) AS "BYTES_SPILLED_TO_LOCAL_STORAGE_AVERAGE", AVG(BYTES_SPILLED_TO_REMOTE_STORAGE) AS "BYTES_SPILLED_TO_REMOTE_STORAGE_AVERAGE" FROM "SNOWFLAKE"."ACCOUNT_USAGE"."QUERY_HISTORY" GROUP BY QUERY_TYPE, WAREHOUSE_NAME, DATABASE_NAME, SCHEMA_NAME;' > /tmp/snowflake-query-history.json
    SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT PIPE_NAME, AVG(CREDITS_USED) AS "CREDITS_USED_AVERAGE", SUM(CREDITS_USED) AS "CREDITS_USED_SUM", AVG(BYTES_INSERTED) AS "BYTES_INSERTED_AVERAGE", SUM(BYTES_INSERTED) AS "BYTES_INSERTED_SUM", AVG(FILES_INSERTED) AS "FILES_INSERTED_AVERAGE", SUM(FILES_INSERTED) AS "FILES_INSERTED_SUM" FROM "SNOWFLAKE"."ACCOUNT_USAGE"."PIPE_USAGE_HISTORY" GROUP BY PIPE_NAME;' > /tmp/snowflake-pipe-usage.json
    SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT QUERY_ID, QUERY_TEXT, (EXECUTION_TIME / 60000) AS EXEC_TIME, WAREHOUSE_NAME, USER_NAME, EXECUTION_STATUS FROM "SNOWFLAKE"."ACCOUNT_USAGE"."QUERY_HISTORY" WHERE EXECUTION_STATUS = '\''SUCCESS'\'' ORDER BY EXECUTION_TIME DESC;' > /tmp/snowflake-longest-queries.json
    SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT EVENT_ID, EVENT_TIMESTAMP, EVENT_TYPE, REPORTED_CLIENT_TYPE, REPORTED_CLIENT_VERSION, FIRST_AUTHENTICATION_FACTOR, SECOND_AUTHENTICATION_FACTOR, IS_SUCCESS, ERROR_CODE, ERROR_MESSAGE FROM "SNOWFLAKE"."ACCOUNT_USAGE"."LOGIN_HISTORY" WHERE IS_SUCCESS = '\''NO'\'';' > /tmp/snowflake-login-failures.json
    SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT DATABASE_NAME, AVERAGE_DATABASE_BYTES, AVERAGE_FAILSAFE_BYTES FROM "SNOWFLAKE"."ACCOUNT_USAGE"."DATABASE_STORAGE_USAGE_HISTORY" ORDER BY USAGE_DATE DESC LIMIT 1;' > /tmp/snowflake-database-storage-usage.json
    SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT SOURCE_CLOUD, SOURCE_REGION, TARGET_CLOUD, TARGET_REGION, TRANSFER_TYPE, AVG(BYTES_TRANSFERRED) AS "BYTES_TRANSFERRED_AVERAGE", SUM(BYTES_TRANSFERRED) AS "BYTES_TRANSFERRED_SUM" FROM "SNOWFLAKE"."ACCOUNT_USAGE"."DATA_TRANSFER_HISTORY" GROUP BY 1, 2, 3, 4, 5;' > /tmp/snowflake-data-transfer-usage.json
    SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT WAREHOUSE_NAME, SUM(CREDITS_USED) AS TOTAL_CREDITS_USED FROM "SNOWFLAKE"."ACCOUNT_USAGE"."WAREHOUSE_METERING_HISTORY" GROUP BY 1 ORDER BY 2 DESC;' > /tmp/snowflake-credit-usage-by-warehouse.json
    SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'SELECT TABLE_NAME, DATABASE_NAME, SCHEMA_NAME, AVG(CREDITS_USED) AS "CREDITS_USED_AVERAGE", SUM(CREDITS_USED) AS "CREDITS_USED_SUM", AVG(NUM_BYTES_RECLUSTERED) AS "BYTES_RECLUSTERED_AVERAGE", SUM(NUM_BYTES_RECLUSTERED) AS "BYTES_RECLUSTERED_SUM", AVG(NUM_ROWS_RECLUSTERED) AS "ROWS_RECLUSTERED_AVERAGE", SUM(NUM_ROWS_RECLUSTERED) AS "ROWS_RECLUSTERED_SUM" FROM "SNOWFLAKE"."ACCOUNT_USAGE"."AUTOMATIC_CLUSTERING_HISTORY" GROUP BY 1, 2, 3;' > /tmp/snowflake-automatic-clustering.json
    SNOWSQL_PWD='Replaceme' snowsql -o output_format=json -o remove_comments=true -o header=true -o timing=false -o friendly=false -a <ACCOUNT> -u <USERNAME> -q 'select USER_NAME,EVENT_TYPE,IS_SUCCESS,ERROR_CODE,ERROR_MESSAGE,FIRST_AUTHENTICATION_FACTOR,SECOND_AUTHENTICATION_FACTOR from "SNOWFLAKE"."ACCOUNT_USAGE"."LOGIN_HISTORY";' > /tmp/snowflake-account-details.json

Enable the Snowflake integration with nri-flex

To set up the Snowflake integration, follow these steps:

Create a file named nri-snowflake-config.yml in the integrations directory:

    touch /etc/newrelic-infra/integrations.d/nri-snowflake-config.yml

To let the agent capture Snowflake data, add the following snippet to nri-snowflake-config.yml:

    ---
    integrations:
      - name: nri-flex
        interval: 30s
        config:
          name: snowflakeAccountMetering
          apis:
            - name: snowflakeAccountMetering
              file: /tmp/snowflake-account-metering.json
      - name: nri-flex
        interval: 30s
        config:
          name: snowflakeWarehouseLoadHistory
          apis:
            - name: snowflakeWarehouseLoadHistory
              file: /tmp/snowflake-warehouse-load-history-metrics.json
      - name: nri-flex
        interval: 30s
        config:
          name: snowflakeWarehouseMetering
          apis:
            - name: snowflakeWarehouseMetering
              file: /tmp/snowflake-warehouse-metering.json
      - name: nri-flex
        interval: 30s
        config:
          name: snowflakeTableStorage
          apis:
            - name: snowflakeTableStorage
              file: /tmp/snowflake-table-storage-metrics.json
      - name: nri-flex
        interval: 30s
        config:
          name: snowflakeStageStorageUsage
          apis:
            - name: snowflakeStageStorageUsage
              file: /tmp/snowflake-stage-storage-usage-history.json
      - name: nri-flex
        interval: 30s
        config:
          name: snowflakeReplicationUsgae
          apis:
            - name: snowflakeReplicationUsgae
              file: /tmp/snowflake-replication-usage-history.json
      - name: nri-flex
        interval: 30s
        config:
          name: snowflakeQueryHistory
          apis:
            - name: snowflakeQueryHistory
              file: /tmp/snowflake-query-history.json
      - name: nri-flex
        interval: 30s
        config:
          name: snowflakePipeUsage
          apis:
            - name: snowflakePipeUsage
              file: /tmp/snowflake-pipe-usage.json
      - name: nri-flex
        interval: 30s
        config:
          name: snowflakeLongestQueries
          apis:
            - name: snowflakeLongestQueries
              file: /tmp/snowflake-longest-queries.json
      - name: nri-flex
        interval: 30s
        config:
          name: snowflakeLoginFailure
          apis:
            - name: snowflakeLoginFailure
              file: /tmp/snowflake-login-failures.json
      - name: nri-flex
        interval: 30s
        config:
          name: snowflakeDatabaseStorageUsage
          apis:
            - name: snowflakeDatabaseStorageUsage
              file: /tmp/snowflake-database-storage-usage.json
      - name: nri-flex
        interval: 30s
        config:
          name: snowflakeDataTransferUsage
          apis:
            - name: snowflakeDataTransferUsage
              file: /tmp/snowflake-data-transfer-usage.json
      - name: nri-flex
        interval: 30s
        config:
          name: snowflakeCreditUsageByWarehouse
          apis:
            - name: snowflakeCreditUsageByWarehouse
              file: /tmp/snowflake-credit-usage-by-warehouse.json
      - name: nri-flex
        interval: 30s
        config:
          name: snowflakeAutomaticClustering
          apis:
            - name: snowflakeAutomaticClustering
              file: /tmp/snowflake-automatic-clustering.json
      - name: nri-flex
        interval: 30s
        config:
          name: snowflakeStorageUsage
          apis:
            - name: snowflakeStorageUsage
              file: /tmp/snowflake-storage-usage.json
      - name: nri-flex
        interval: 30s
        config:
          name: snowflakeAccountDetails
          apis:
            - name: snowflakeAccountDetails
              file: /tmp/snowflake-account-details.json
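
Each `file:` entry in this configuration must point at a JSON file that the scheduled snowsql commands have already written. As a rough sketch, a small shell function could list any referenced file that is missing or empty before the agent is restarted. The function name `check_flex_files` is an assumption made for illustration and is not part of nri-flex.

```shell
#!/usr/bin/env bash
# Sketch only: reports `file:` paths in a Flex config that are missing or empty.
# check_flex_files is a hypothetical helper, not an nri-flex command.

# check_flex_files CONFIG
# Prints one line per problem path and returns non-zero if any are found.
check_flex_files() {
  local config="$1" path status=0
  while IFS= read -r path; do
    [ -n "$path" ] || continue          # skip the blank line of an empty match
    if [ ! -s "$path" ]; then           # -s: file exists and is larger than 0 bytes
      echo "missing or empty: $path"
      status=1
    fi
  done <<EOF
$(sed -n 's/^[[:space:]]*file:[[:space:]]*//p' "$config")
EOF
  return "$status"
}

# Example call against the file created in this section:
# check_flex_files /etc/newrelic-infra/integrations.d/nri-snowflake-config.yml
```

The check only looks at file presence and size; it does not validate that the contents are well-formed JSON.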

Restart the New Relic infrastructure agent

Restart the infrastructure agent:

    sudo systemctl restart newrelic-infra.service

Within a few minutes, Snowflake metrics start arriving at one.newrelic.com.

Find your data

You can use the pre-built dashboard template named Snowflake to monitor your Snowflake metrics. To use the pre-built dashboard template, follow these steps:

From one.newrelic.com, go to the + Add data page.

Click Dashboards.

In the search bar, type Snowflake.

The Snowflake dashboard should appear. Click it to install it.

Your Snowflake dashboard is considered a custom dashboard and can be found in the Dashboards UI. For docs on using and editing dashboards, see the dashboard docs.

Here is a NRQL query to check the Snowflake metrics:

    SELECT * FROM snowflakeAccountMeteringSample

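What's next?

To learn more about building NRQL queries and generating dashboards, check out these docs:

- Introduction to the query builder, to create basic and advanced queries.

- Introduction to dashboards, to customize your dashboard and carry out different actions.

- Manage your dashboard, to adjust the dashboard display mode or add more content to your dashboard.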