Kafka monitoring gives you real-time visibility into your Apache Kafka clusters, so you can keep data streaming reliably and avoid costly downtime in distributed systems. With the OpenTelemetry Collector, you get comprehensive monitoring through a flexible, vendor-neutral approach that works both in self-hosted environments and on Kubernetes with Strimzi.

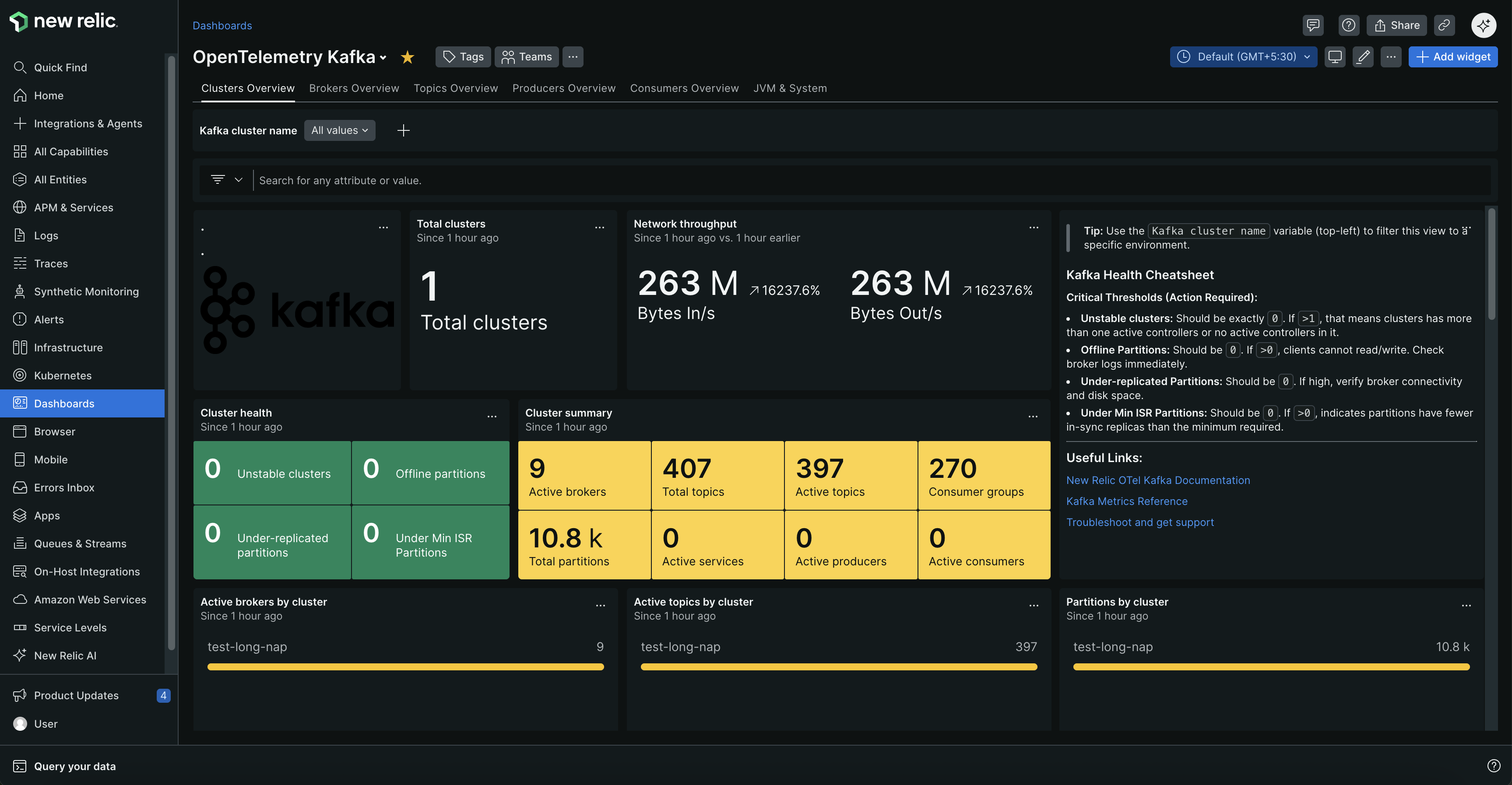

Monitor your Kafka clusters with comprehensive dashboards that show cluster health, broker status, topic metrics, and consumer group performance.

Why monitor Kafka?

- Prevent outages - Get alerted to broker failures, under-replicated partitions, and offline topics before they cause downtime

- Optimize performance - Identify consumer lag, slow producers, and network bottlenecks that slow down data processing

- Plan capacity - Track resource usage, message rates, and connection counts so you can scale proactively

- Ensure data integrity - Monitor replication health and partition balance to prevent data loss
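A partition is under-replicated when fewer of its replicas are in sync than it is configured to have. The sketch below shows that check in isolation. It assumes you already have per-partition replica counts, for example from the Kafka metrics receiver's `kafka.partition.replicas` and `kafka.partition.replicas_in_sync` metrics; the `PartitionReplicaStatus` type and the sample topics are hypothetical and only serve the illustration.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class PartitionReplicaStatus:
    """Hypothetical per-partition snapshot of replica counts."""
    topic: str
    partition: int
    replicas: int          # configured replicas (e.g. kafka.partition.replicas)
    replicas_in_sync: int  # in-sync replicas (e.g. kafka.partition.replicas_in_sync)


def under_replicated(statuses: Iterable[PartitionReplicaStatus]) -> List[Tuple[str, int]]:
    """Return sorted (topic, partition) pairs whose in-sync count is below the replica count."""
    return sorted(
        (s.topic, s.partition)
        for s in statuses
        if s.replicas_in_sync < s.replicas
    )


if __name__ == "__main__":
    # Hypothetical sample data, not real cluster output.
    sample = [
        PartitionReplicaStatus("orders", 0, 3, 3),
        PartitionReplicaStatus("orders", 1, 3, 2),
        PartitionReplicaStatus("payments", 0, 3, 1),
    ]
    print(under_replicated(sample))
```

In practice you would express the same condition as an alert on the metrics rather than in application code. The sketch only makes the comparison explicit.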

Common use case

Whether you stream financial transactions, process IoT sensor data, or handle communication between microservices, Kafka monitoring helps you catch problems before they affect your business. Get alerted when consumer lag spikes threaten real-time dashboards, when broker failures put data at risk, or when network bottlenecks slow down critical data pipelines. This monitoring is essential for e-commerce platforms, real-time analytics systems, and any application where delayed or failed message delivery can affect the user experience or business operations.

Get started

Choose your Kafka environment to start monitoring. Each setup guide includes prerequisites, configuration steps, and troubleshooting tips.

How it works

The OpenTelemetry Collector connects to your Kafka cluster using two specialized receivers:

Data collection:

- The Kafka metrics receiver connects to the Kafka bootstrap port to collect cluster health, consumer lag, topic metrics, and partition status

- The JMX receiver connects to the brokers' JMX ports to collect performance metrics, JVM data, and detailed operational insights
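Consumer lag, one of the values the Kafka metrics receiver collects, is the gap between a partition's latest (log-end) offset and the offset the consumer group has committed for it. The sketch below shows that arithmetic on hypothetical offset maps keyed by `(topic, partition)`. Skipping partitions with no committed offset and clamping negative values to zero are assumptions of this sketch, not documented receiver behavior.

```python
from typing import Dict, Mapping, Optional, Tuple

TopicPartition = Tuple[str, int]


def consumer_group_lag(
    log_end_offsets: Mapping[TopicPartition, int],
    committed_offsets: Mapping[TopicPartition, Optional[int]],
) -> Dict[TopicPartition, int]:
    """Per-partition lag = latest (log-end) offset minus the group's committed offset."""
    lag: Dict[TopicPartition, int] = {}
    for tp, committed in committed_offsets.items():
        if committed is None or tp not in log_end_offsets:
            # No commit yet, or unknown partition: lag is undefined, so skip it.
            continue
        # Clamp at zero because the two offsets may be read at slightly different times.
        lag[tp] = max(0, log_end_offsets[tp] - committed)
    return lag


def total_lag(per_partition: Mapping[TopicPartition, int]) -> int:
    """Sum of per-partition lag for the whole group."""
    return sum(per_partition.values())


if __name__ == "__main__":
    # Hypothetical offsets for one consumer group.
    end = {("orders", 0): 1500, ("orders", 1): 900}
    committed = {("orders", 0): 1200, ("orders", 1): 900, ("orders", 2): None}
    lag = consumer_group_lag(end, committed)
    print(lag, total_lag(lag))
```

A per-group total like `total_lag` is useful for a single alert threshold, while the per-partition values show whether the lag is concentrated on one partition.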

Data flow:

- The collector gathers metrics from both receivers simultaneously

- The data is processed, enriched, and batched for efficient transmission

- Metrics are exported to New Relic through the OTLP exporter

- New Relic creates entities and populates dashboards automatically
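The data flow above corresponds to a single collector metrics pipeline: two receivers, a batch processor, and an OTLP exporter. The sketch below shows that wiring as a Python dictionary and checks that every component the pipeline references is actually defined. You would normally write the collector configuration as YAML. The broker address, JMX endpoint, jar path, and license key are hypothetical placeholders, and the New Relic endpoint assumes the US region over gRPC. Check the setup guide for your environment before relying on any of these values.

```python
import json

# Hypothetical values: replace brokers, JMX endpoint, jar path, and key with your own.
config = {
    "receivers": {
        "kafkametrics": {
            "brokers": ["kafka-broker-1:9092"],
            "protocol_version": "2.0.0",
            "scrapers": ["brokers", "topics", "consumers"],
        },
        "jmx": {
            "jar_path": "/opt/opentelemetry-jmx-metrics.jar",
            "endpoint": "kafka-broker-1:9999",
            "target_system": "jvm,kafka",
        },
    },
    "processors": {"batch": {}},
    "exporters": {
        "otlp": {
            "endpoint": "https://otlp.nr-data.net:4317",  # assumed US region, gRPC
            "headers": {"api-key": "NEW_RELIC_LICENSE_KEY"},
        },
    },
    "service": {
        "pipelines": {
            "metrics": {
                "receivers": ["kafkametrics", "jmx"],
                "processors": ["batch"],
                "exporters": ["otlp"],
            }
        }
    },
}


def undefined_components(cfg: dict) -> list:
    """List pipeline references that have no matching top-level definition."""
    missing = []
    for name, pipeline in cfg["service"]["pipelines"].items():
        for kind in ("receivers", "processors", "exporters"):
            for component in pipeline.get(kind, []):
                if component not in cfg.get(kind, {}):
                    missing.append(f"{name}.{kind}.{component}")
    return missing


if __name__ == "__main__":
    problems = undefined_components(config)
    if problems:
        raise SystemExit(f"undefined components: {problems}")
    # Print for inspection only; translate to YAML for the collector's config file.
    print(json.dumps(config, indent=2))
```

Putting `batch` between the receivers and the exporter is what groups metrics before transmission. Both receivers feed the same pipeline, so their data leaves the collector together.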

What you get: Key metrics include consumer lag, broker health, request rates, network throughput, partition replication status, and resource utilization.

For complete metric names, descriptions, and alerting recommendations, see the Kafka metrics reference.

Optional: Add application-level monitoring

The monitoring setup above tracks the health and performance of your Kafka cluster. To get a complete picture of how data flows through your system, you can also monitor the applications that send messages to and receive messages from Kafka.

Application monitoring adds:

- Request latencies from your applications to Kafka

- Application-level throughput metrics

- Error rates and distributed traces

- End-to-end visibility from producers → brokers → consumers

Quick setup: Use the OpenTelemetry Java agent for zero-code Kafka instrumentation. For advanced configuration, see the Kafka instrumentation documentation.
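With the Java agent, "zero-code" means you attach the agent to the JVM at startup and configure it through environment variables. You do not change application code. The sketch below builds that launch command and environment. The `-javaagent` flag and the `OTEL_*` variables are standard. The jar paths, service name, and key are hypothetical. The endpoint assumes New Relic's US OTLP/HTTP endpoint on port 4318, which matches the `http/protobuf` protocol set here.

```python
import shlex
from typing import Dict, List, Tuple


def java_agent_launch(
    app_jar: str,
    agent_jar: str,
    service_name: str,
    license_key: str,
    endpoint: str = "https://otlp.nr-data.net:4318",  # assumed US region, OTLP/HTTP
) -> Tuple[List[str], Dict[str, str]]:
    """Build argv and environment for running a Kafka client app with the OTel Java agent."""
    argv = ["java", f"-javaagent:{agent_jar}", "-jar", app_jar]
    env = {
        "OTEL_SERVICE_NAME": service_name,
        "OTEL_EXPORTER_OTLP_ENDPOINT": endpoint,
        "OTEL_EXPORTER_OTLP_PROTOCOL": "http/protobuf",
        "OTEL_EXPORTER_OTLP_HEADERS": f"api-key={license_key}",
    }
    return argv, env


if __name__ == "__main__":
    # Hypothetical producer application and key.
    argv, env = java_agent_launch(
        "order-producer.jar", "opentelemetry-javaagent.jar",
        "order-producer", "NEW_RELIC_LICENSE_KEY",
    )
    exports = " ".join(f"{k}={shlex.quote(v)}" for k, v in env.items())
    print(exports, shlex.join(argv))
```

The printed line can be pasted into a shell. To launch from Python instead, pass `argv` to `subprocess.run` together with `env={**os.environ, **env}`.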

Next steps

Ready to start monitoring your Kafka clusters?

Set up monitoring:

- Self-hosted Kafka - Monitor Kafka running on physical or virtual machines

- Kubernetes with Strimzi - Monitor Kafka deployed on Kubernetes

After setup:

- Find and query your data - Navigate the New Relic UI and write NRQL queries

- Explore Kafka metrics - Complete metrics reference with alerting recommendations