This guide walks you through ideas and processes for automating your New Relic installation and configuration to maximize the value you're getting from observability. It's part of our series on observability maturity.

Overview

Observability as code is the practice of defining the configuration of your observability tools in code, so that it can be applied in a consistent, controlled, and automated way and help you derive maximum value from your telemetry data. This guide focuses on doing this for your New Relic implementation.

Why should you use this guide?

As your infrastructure, application, and service technologies evolve, their scale and complexity increase. This increases the volume of data collected from instrumentation tools (including New Relic), and with it the challenge of making sense of that data, bringing it into context, and acting on it.

Using our observability as code methodology to automate the configuration of New Relic addresses this challenge, helping organizations to accelerate adoption, improve stability, and drive better governance.

This guide explains how to approach implementing observability as code, and offers good practice advice and reference examples to help you build and maintain your New Relic platform at speed and at scale. It uses automation workflows and provisioning tools to help organizations scale observability best practices while reducing manual effort and improving service delivery. A successful implementation of these ideas should deliver real value to both the IT organization and the business it supports.

Desired outcomes

The desired outcome is to reduce the costs and risks associated with implementing and maintaining an optimally configured observability solution in rapidly changing, large-scale environments.

Specifically, some of the benefits of adopting observability as code practices are:

Repeatable, replicable, reusable

Managing New Relic resources through code means that they can easily be repeated, scaled, and updated in bulk. Taking a modular approach with provisioning tools like Terraform allows packaged sets of resources such as dashboards, alerts, and workloads to be quickly and easily shared and deployed, accelerating startup time and improving organization-wide standards.

Reduced toil

The toil of creating and maintaining New Relic resources managed through code is significantly less than that of managing them manually via the user interface, especially when working at scale. Our interface lends itself well to discovery and testing, but changes to code-managed resources can be applied in bulk, vastly reducing the time spent administering them. One common approach is to develop alerts and dashboards within the user interface and then, when they're considered mature enough, migrate them to code-managed resources.

Documentation and context

The huge variety of resources that can be configured in New Relic may make it difficult to understand, when looking at a single resource, why it's been created or configured as it has. Configuration via code allows for associated commenting and documentation that helps explain why certain choices may have been made, when, and by whom.

Auditable history

While it's possible to see who made changes to New Relic resources via the NrAuditEvent event, this doesn't provide much background on why the changes were made, what the previous state was, or who approved them. Managing resources via code in tandem with an automated, approval-based provisioning workflow gives much clearer visibility of changes and improved governance, while also providing methods for rollback and recovery.

Security

Observability as code allows for stricter controls over the use of API keys for managing New Relic resources. Security is improved by reducing the number of API keys in circulation and ensuring adequate governance is in place concerning their creation and dissemination. Dissuading users from using their own keys, especially within automated workflows, means the surface area for a key breach or unintended corruption is reduced.

Efficient delta changes

Provisioning tools like Terraform make it possible to make delta changes to existing resources. This makes updates quick and efficient, as only the resource attributes that need changing are changed, with minimal resource destruction and re-creation. This is important because it ensures that the GUIDs of resources such as dashboards and alerts are not changed on update.

React to external stimuli

Combining observability as code with automated workflows allows for New Relic resources to be created and amended as a result of external stimuli such as application deployments, infrastructure events, or any other data input. For example, you could automatically generate dashboards and alerts that compare key golden signal metrics between code version releases at deployment time.

Contextual ownership and packaging

Managing resources in code allows for related resources to be managed together. It's easier to comprehend and manage them in one place, in code, than it is when distributed across the user interface. For instance, this allows different teams to manage, view and maintain the resources within their sphere of influence and not have to hunt for resources they manage.

Disaster recovery

Occasionally mistakes happen: the wrong resource is updated or deleted. Recovering from these situations with manual resource management is difficult because it's not easy to know what existed before, or even whether the resource has been changed or lost at all. Observability as code helps protect against these issues by ensuring that any resource can be recreated or reset to the expected configuration. It also creates an opportunity to proactively detect configuration drift.

Speed of deployment

Observability as code accelerates the speed of deployment by allowing for a common set of resources to be easily shared amongst teams and used to bootstrap observability tooling. This is particularly evident in microservice architectures where similar application deployment architectures benefit from cookie-cutter module-based New Relic resources. Creating reusable centrally managed modules also helps to standardize common approaches to observability tooling.

Key performance indicators

The maturity of your observability as code deployment can be evaluated in several ways. Generally, the more resources in an environment that are managed via code, the more mature the deployment. Here are some of the KPIs:
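As one illustration, the share of resources that are code managed could be computed along the lines of the sketch below. It assumes resources carry the codeManaged=true tag suggested later in this guide. The Resource class and function names are hypothetical, and in practice the inventory would come from a query against your own accounts.

```python
from dataclasses import dataclass, field


@dataclass
class Resource:
    """Hypothetical, simplified stand-in for a New Relic resource."""

    name: str
    tags: dict[str, str] = field(default_factory=dict)


def is_code_managed(resource: Resource) -> bool:
    """Treat a resource as code managed when it is tagged codeManaged=true."""
    return resource.tags.get("codeManaged", "").lower() == "true"


def code_managed_percentage(resources: list[Resource]) -> float:
    """Return the percentage of code-managed resources (0.0 for an empty list)."""
    if not resources:
        return 0.0
    managed = sum(1 for resource in resources if is_code_managed(resource))
    return 100.0 * managed / len(resources)
```

Tracking this figure per account or per team over time gives a simple view of how adoption is progressing.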

Prerequisites

Before embarking on an observability as code implementation with New Relic, you should get acquainted with our fundamentals, which are available from New Relic University.

You should also:

- Have chosen, and be familiar with, a provisioning tool such as Terraform

- Have access to a CI/CD platform that supports automation workflows

- Be familiar with the New Relic platform and API features

- Have determined a tagging and naming convention or strategy for your resources

- Have decided on the asset granularity strategy for determining KPIs

- Have prepared service account API keys and a permissions model for applying changes from CI/CD tools

Establish current state

Before adopting observability-as-code practices, you should evaluate the current state. You can use the maturity assessment concepts discussed above to determine how mature your environments are and to prioritize which environments to tackle first.

Improvement process

You may have decided to adopt observability-as-code with New Relic, but are unsure how to get started, or you want to avoid common dead ends and traps. Here we provide guides on good practice, advice, and reference examples to help you confidently adopt observability-as-code.

Team based resource isolation

Many teams may be involved in managing resources in a single New Relic account, or across multiple accounts. Our observability-as-code practice provides a way to more tightly control the isolation, access, and management of resources. Restricting write access for individuals and requiring changes to be made via managed code allows teams to work safely in the same space without risking affecting each other's resources.

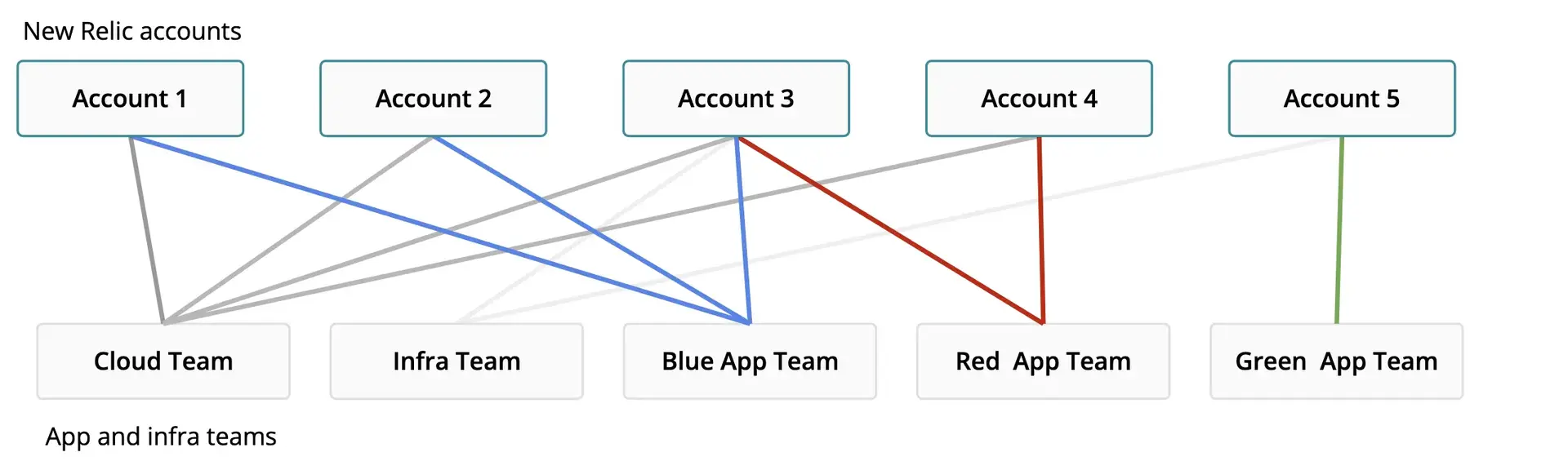

For example, you may have a shared infrastructure team that manages cloud infrastructure on behalf of multiple application teams that each monitor their applications in different New Relic accounts. This shared infrastructure team would manage their own New Relic resources within these accounts alongside the application teams' own resources. Restricting user write access and ensuring key resources are only managed via observability-as-code workflows allows the resources from the teams to live together in harmony and reduces the possibility of unauthorized or unintentional change.

This diagram illustrates how CI/CD pipelines for different teams may have access to manage isolated resources in multiple overlapping accounts.

API keys

Managing resources using our NerdGraph API or via provisioning tools such as Terraform requires a New Relic user key. User keys are associated with a specific user and inherit the permissions of that user.

Service accounts

Creating New Relic user keys against real human users can cause issues for automated pipelines. For example, if that user's permissions change as part of a team move, or the user leaves the organization, an automation pipeline that relies on their key could fail.

Consider creating "service account" users specifically for automation purposes, managed by a central management team. This team can then generate and manage keys for dissemination to other implementing teams. A service account can be used to generate multiple keys, ensuring that each implementing team uses only its own key. Keys managed in this way are more easily audited and help ensure that permissions are set correctly and remain stable. Individuals should be encouraged not to use their own user keys except for development and testing.

Automated API key generation

New Relic user keys can be generated via NerdGraph, allowing for fully automated on-demand key provisioning. This could be used to automate generation of keys via a portal or service process flow.
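A minimal sketch of such a request is shown below. It assumes the NerdGraph apiAccessCreateKeys mutation, the US-region endpoint, and the API-Key request header; check the mutation shape against the NerdGraph API explorer before relying on it. The function names, account ID, user ID, and key name are hypothetical.

```python
import json
import urllib.request

# Assumed US-region NerdGraph endpoint; EU accounts use a different host.
NERDGRAPH_URL = "https://api.newrelic.com/graphql"


def build_create_user_key_payload(
    account_id: int, user_id: int, name: str, notes: str = ""
) -> dict:
    """Build the GraphQL request body for creating one user key."""
    query = (
        "mutation {\n"
        "  apiAccessCreateKeys(keys: {user: {"
        f"accountId: {int(account_id)}, userId: {int(user_id)}, "
        # json.dumps quotes and escapes the free-text values.
        f"name: {json.dumps(name)}, notes: {json.dumps(notes)}"
        "}}) {\n"
        "    createdKeys { id key name notes type }\n"
        "    errors { message type }\n"
        "  }\n"
        "}"
    )
    return {"query": query}


def create_user_key(
    api_key: str, account_id: int, user_id: int, name: str, notes: str = ""
) -> dict:
    """Send the mutation and return the decoded JSON response."""
    payload = build_create_user_key_payload(account_id, user_id, name, notes)
    request = urllib.request.Request(
        NERDGRAPH_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "API-Key": api_key},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

Here user_id would be the ID of the service account user, and api_key a key held by the central management team. The returned key should go straight into a secret store rather than being logged.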

Automation tools

We recommend using Terraform to manage the provisioning of your New Relic resources. Tools like Terraform allow you to configure resources in code without having to concern yourself with which APIs to call or how to maintain a record of what's been created.

We actively maintain the New Relic Terraform provider. Feature requests and issues can be submitted in our open source Terraform GitHub repository.

State management

When managing New Relic resources with Terraform it's important to keep a stable record of the Terraform state. Ideally state should be securely stored in a remote location, be version controlled and leverage state locking in order to ensure stability.

Identify managed resources

It's important that you're able to easily identify resources in New Relic that are code managed. This not only makes it possible to evaluate the maturity KPI, but also helps users interacting with the UI to understand which resources are code managed and therefore shouldn't be changed manually.

It's good practice to develop a consistent organization-wide standard for tagging and naming resources that are managed in code. At the very least, you should tag each such resource to identify it as code managed. For example, you could add the tag codeManaged=true and perhaps a suffix or prefix to the resource name (for example, "Database performance summary [CM]"). Additionally, you should consider tagging resources with further useful information, such as the owning team, the source of the resource, or the code version.
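A convention like this is easier to keep consistent when names and tags are generated rather than typed by hand. The sketch below is one possible shape. Only the codeManaged tag and the [CM] suffix come from the example above; the other tag keys and the function names are hypothetical.

```python
CODE_MANAGED_SUFFIX = " [CM]"


def managed_name(base_name: str) -> str:
    """Append the code-managed suffix unless the name already carries it."""
    if base_name.endswith(CODE_MANAGED_SUFFIX):
        return base_name
    return base_name + CODE_MANAGED_SUFFIX


def managed_tags(team: str, source: str, code_version: str) -> dict[str, str]:
    """Return the tags every code-managed resource should carry."""
    return {
        "codeManaged": "true",
        "owningTeam": team,           # hypothetical tag key
        "source": source,             # hypothetical tag key
        "codeVersion": code_version,  # hypothetical tag key
    }
```

For example, managed_name("Database performance summary") returns "Database performance summary [CM]", and calling it again on that result leaves the name unchanged.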

Dealing with large resource sets

Every resource configured in Terraform needs to be refreshed and evaluated for changes when new configurations are applied. As the number of configurations grows, the list of resources to check increases. Each check requires an API call, so large configurations may take some time to complete and may encounter API limits if too many requests are made in parallel. One approach is to reduce the number of resources managed within a single state by breaking the configuration down into parts. Reducing the parallelism of Terraform requests can also alleviate API limiting.
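Both ideas can be sketched in a few lines. The first function groups resource addresses so that each team gets its own, smaller state. Splitting by team is an assumption for illustration, and any stable grouping would do. The second builds a plan command with Terraform's -parallelism flag lowered from its default of 10. The function names are hypothetical.

```python
from collections import defaultdict


def group_resources_by_team(
    resources: list[tuple[str, str]],
) -> dict[str, list[str]]:
    """Group (team, resource address) pairs into one resource list per team."""
    groups: dict[str, list[str]] = defaultdict(list)
    for team, address in resources:
        groups[team].append(address)
    return dict(groups)


def terraform_plan_command(parallelism: int = 10) -> list[str]:
    """Build a non-interactive plan command with a bounded request fan-out."""
    if parallelism < 1:
        raise ValueError("parallelism must be at least 1")
    return ["terraform", "plan", "-input=false", f"-parallelism={parallelism}"]
```

Each group would then live in its own Terraform configuration and state, planned and applied independently.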

Take a modular approach

Modules are the main way to package and reuse resource configurations with Terraform, and can be used to package together any number of New Relic resources. Packaging like this allows for parameter-driven deployments. For example, you may have a module that takes an application name and builds an overview dashboard, golden signal alerts, and a synthetic journey, all in one operation.
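The parameter-driven idea can be sketched outside Terraform as a function that turns one input into a bundle of related definitions. In Terraform the equivalent would be a module with an input variable. The dictionary layout and function name below are hypothetical and are not the provider's schema. The NRQL assumes APM data in the Transaction and TransactionError event types.

```python
def service_bundle(app_name: str) -> dict[str, dict]:
    """Return illustrative resource definitions for a single application."""
    # The application name is interpolated as-is; a real implementation
    # would need to escape quotes in it.
    where = f"WHERE appName = '{app_name}'"
    return {
        "dashboard": {
            "name": f"{app_name} overview",
            "queries": [
                f"SELECT average(duration) FROM Transaction {where} TIMESERIES",
                f"SELECT count(*) FROM TransactionError {where} TIMESERIES",
            ],
        },
        "alert_policy": {"name": f"{app_name} golden signals"},
        "synthetic_monitor": {"name": f"{app_name} journey"},
    }
```

Every team that calls the function with its own application name gets the same structure, which is what makes module-based rollouts consistent.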

Terraform modules can be published to remote registries allowing teams to share and consume resource packages developed by other teams. This provides opportunities to implement standardization and roll out version controlled changes and improvements easily.

Implementation reference

Automation workflows

Automation workflows are essential for scaling observability-as-code to teams and organizations. There are many CI/CD tools and services available that can drive Terraform workflows. These allow configuration changes to be discussed and approved while also providing an auditable trail of changes.

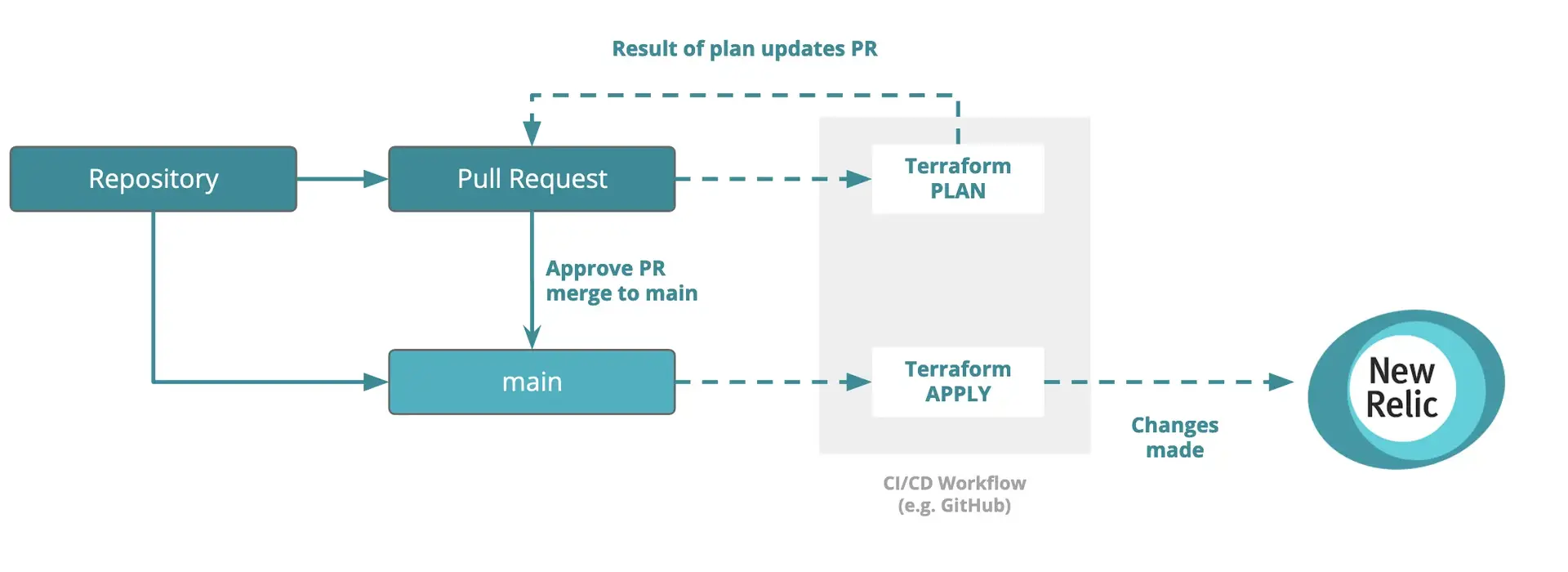

We recommend adopting a Terraform workflow to enable teams to work together on New Relic configuration. One such workflow uses the CI/CD capabilities of code versioning systems such as GitHub, GitLab, and Bitbucket to automatically plan and apply code using built-in approval and review mechanisms.

This diagram illustrates how a change is raised as a PR, which is then approved and merged to main to trigger resource deployment.

Example workflow implementations

The following reference examples demonstrate how to set up a Terraform workflow in a number of different systems:

- GitHub Actions example: this example shows how to use GitHub Actions together with AWS S3 backed state storage.

- GitLab pipeline example: this example shows how to use a GitLab pipeline together with GitLab HTTP state storage.

- Bitbucket pipeline example: this example demonstrates using a Bitbucket pipeline together with S3 backed state storage.

Version pinning

When provisioning resources automatically via observability-as-code workflows, it's important to ensure the workflow performs the same way on every run. We recommend pinning the versions of Terraform and the New Relic provider that you use, so that unexpected upgrades don't cause pipeline failures. If you pin versions, you should periodically upgrade to, and test, the latest versions. You can learn more about constraining versions in the Terraform docs.

Detecting configuration drift

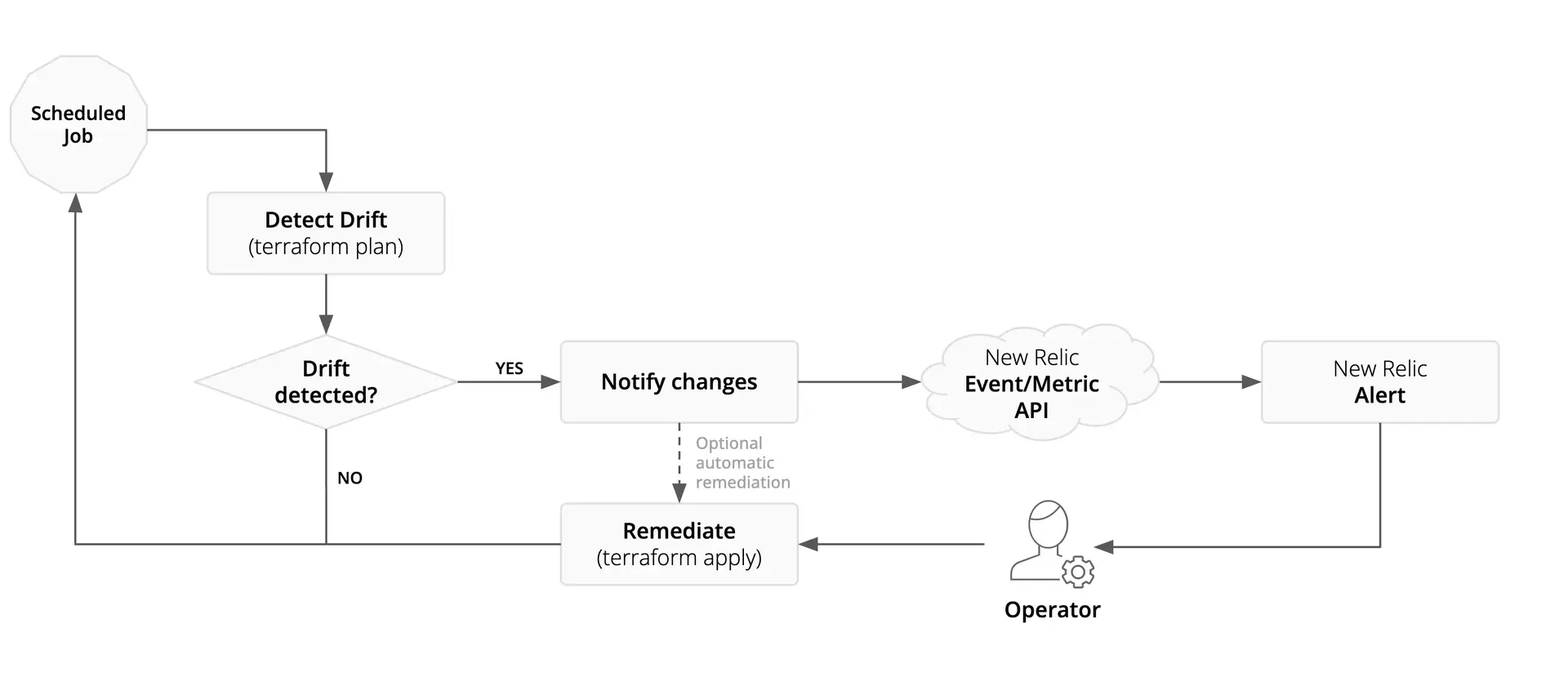

Understanding configuration drift is important to ensure the stability and reliability of your observability platform. Depending on your access control and permissions strategy, users may be able to change resources in the UI that are also managed by code. Detecting this configuration drift enables you to understand the changes and fix the configuration if necessary.

There are two main modes of operation:

- Detect and notify: In this mode, drift is detected and operators are notified. However, no remedial changes are made automatically.

- Detect, remediate and notify: In this mode, drift is detected and, where possible, also remediated automatically by the workflow.

This diagram illustrates how a configuration drift workflow may be implemented. Detected changes are reported to New Relic where they can be alerted upon and tracked over time.
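The two modes can be expressed as a small decision function. This sketch assumes the workflow runs terraform plan with the -detailed-exitcode flag, where exit code 0 means no changes, 2 means changes are pending, and 1 means the plan itself failed. The function name and the "apply" and "notify" step labels are hypothetical.

```python
def drift_actions(plan_exit_code: int, auto_remediate: bool) -> list[str]:
    """Map a `terraform plan -detailed-exitcode` result to workflow steps."""
    if plan_exit_code == 0:
        # No difference between code and deployed resources.
        return []
    if plan_exit_code == 2:
        # Drift detected: always notify, and re-apply first if remediating.
        return ["apply", "notify"] if auto_remediate else ["notify"]
    # Exit code 1 (or anything unexpected) means the plan did not complete.
    raise RuntimeError(f"terraform plan failed with exit code {plan_exit_code}")
```

Treating a failed plan as an error rather than as "no drift" matters here, because otherwise a broken pipeline would silently report a healthy configuration.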

Configuration drift reference example

This reference example uses GitHub Actions to schedule regular Terraform plan operations. The number of changes detected is reported to New Relic, and a Terraform re-apply can optionally be initiated.

Terraform configuration drift example
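Independently of the linked example, the counting step could look like the sketch below. It assumes the plan has been saved and rendered with terraform show -json, whose output lists each resource under resource_changes with a change.actions array. The event type, attribute names, and function names are hypothetical, and sending the event to New Relic is left out.

```python
import json

# Plan actions that indicate the deployed resource differs from the code.
DRIFT_ACTIONS = {"create", "update", "delete"}


def count_drifted_resources(plan_json: str) -> int:
    """Count resources in a JSON plan that Terraform would change."""
    plan = json.loads(plan_json)
    drifted = 0
    for resource_change in plan.get("resource_changes", []):
        actions = set(resource_change.get("change", {}).get("actions", []))
        # "no-op" and "read" are ignored; a replacement appears as
        # both "delete" and "create" and is counted once.
        if actions & DRIFT_ACTIONS:
            drifted += 1
    return drifted


def drift_event(workspace: str, drifted: int) -> dict:
    """Build a custom event describing the drift found in one workspace."""
    return {
        "eventType": "TerraformDrift",  # hypothetical event type name
        "workspace": workspace,
        "driftedResources": drifted,
    }
```

Reporting a count of zero on clean runs, rather than nothing, makes it possible to alert when the drift check itself stops running.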

Conclusion

Best practices to adopt

- Clearly define and implement the KPIs to measure observability-as-code maturity.

- Establish and communicate the current state prior to implementing new observability-as-code capabilities.

- Leverage automation wherever possible to accelerate observability delivery across environments.

- Auto-document assets created via code.

- Track and address configuration drift.

- Drive extended reuse of assets across environments.

Value realization

At the end of this process you should realize the following benefits:

- Easily and effectively communicate your current observability-as-code maturity.

- Reduce the time to observability of your environments.

- Reduce the manual effort required to implement observability and free up productive time.

- Reduce operational risk in your production environments.

- Improve the ability to detect and resolve issues faster.

- Accelerate time to deploy new releases.

- Make telemetry data more actionable for your organization as a whole.

- Improve your service availability and delivery to the business.