The OpenTelemetry APM UI is a curated experience available for service entities. If you haven't configured your service with New Relic and OpenTelemetry, see OpenTelemetry APM monitoring.

The OpenTelemetry APM UI pages are designed to help quickly identify and diagnose problems. Many require data to conform to various OpenTelemetry semantic conventions, but some are general purpose.

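The following New Relic concepts recur or overlap across several pages and are described in their own sections below: golden signals, narrowing data with filters, the metrics or spans toggle, and golden metrics.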

Find OpenTelemetry APM services

To find OpenTelemetry APM services, navigate to either All entities > Services > OpenTelemetry or APM & Services. Click a service to open its Summary page.

Within the entity explorer, you can filter by entity tags. For details on how entity tags are computed, see OpenTelemetry resources in New Relic.

Page: Summary

The summary page provides an overview of your service's health, including:

- Golden signals: response time, throughput, and error rate. See Golden signals for details on how these are computed.

- Related entities: other services communicating with this service and related infrastructure. See Service map for a detailed view.

- Activity: status of any alerts active for this service.

- Distributed tracing insights: discover whether downstream or upstream entities might be contributing to degraded performance. See Related trace entity signals for more details.

- Instances: breakdown of golden signals by instance when a service is scaled horizontally. Depends on the service.instance.id resource attribute (see Services for more details).
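To illustrate why the instance breakdown depends on service.instance.id, here is a minimal Python sketch that groups entry spans (span kind server or consumer) by that resource attribute and counts requests per instance. The `Span` class and `requests_per_instance` function are hypothetical stand-ins for exported span data, not a New Relic or OpenTelemetry API.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Span:
    """Hypothetical, simplified view of an exported span."""
    name: str
    kind: str  # "server", "client", "consumer", "producer", or "internal"
    resource: dict = field(default_factory=dict)


def requests_per_instance(spans):
    """Count entry spans (server/consumer) per service.instance.id.

    Spans without the attribute all land under None, which is why
    instances cannot be told apart when the attribute is missing.
    """
    counts = Counter()
    for span in spans:
        if span.kind in ("server", "consumer"):
            counts[span.resource.get("service.instance.id")] += 1
    return dict(counts)


spans = [
    Span("GET /users", "server", {"service.instance.id": "pod-a"}),
    Span("GET /users", "server", {"service.instance.id": "pod-b"}),
    Span("GET /orders", "server", {"service.instance.id": "pod-a"}),
    Span("SELECT users", "client", {"service.instance.id": "pod-a"}),
]
print(requests_per_instance(spans))  # {'pod-a': 2, 'pod-b': 1}
```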

Page: Distributed tracing

The distributed tracing page provides detailed insights into OpenTelemetry trace data. See Distributed tracing for page usage information. See OpenTelemetry traces in New Relic for details on how OpenTelemetry trace data is ingested into New Relic.

As with golden signals, spans are classified as errors if the span status is set to ERROR (for example, otel.status_code = ERROR). If the span is an error, the span status description (for example, otel.status_description) is displayed in error details.

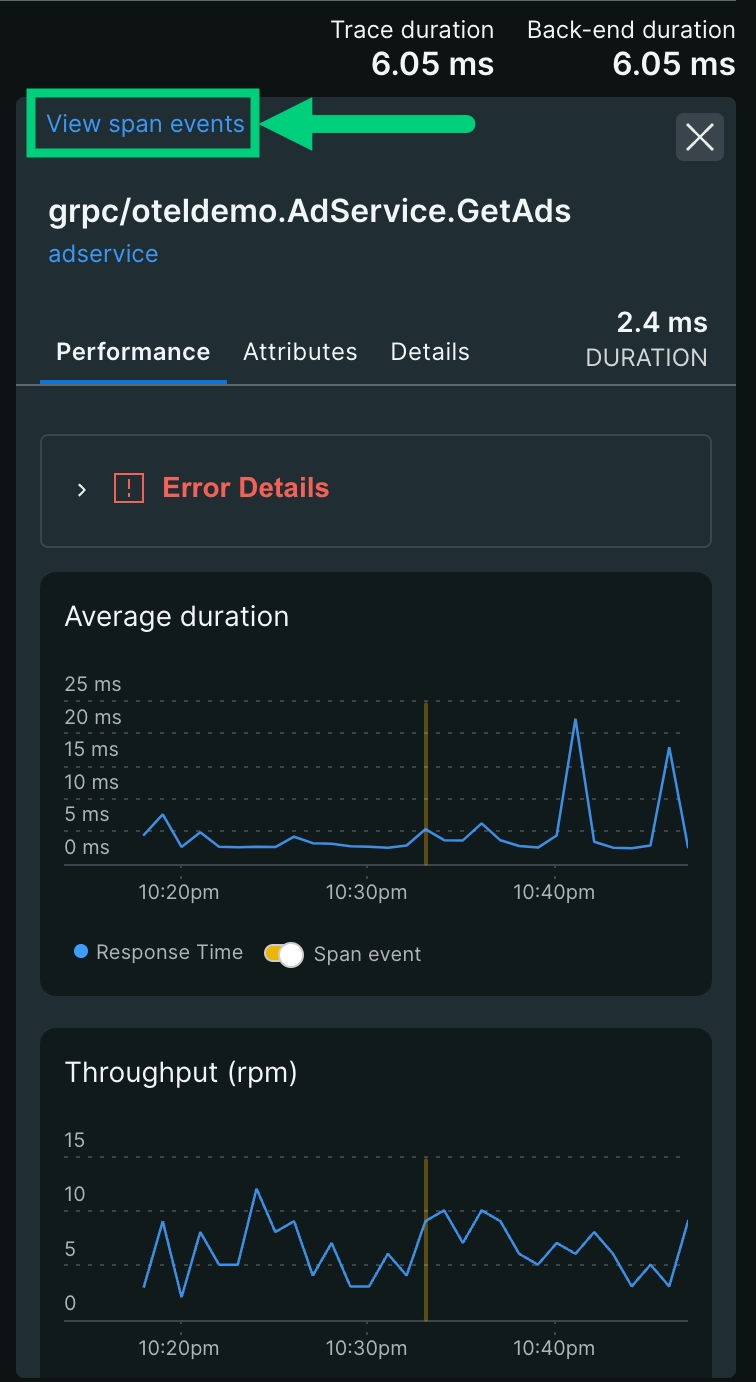

OpenTelemetry span events attach additional event context information to a particular span. They are most commonly used to capture exception information. If available, you can view a span's events in trace details.

Tip

The presence of a span exception event doesn't qualify the span as an error on its own. Only spans with span status set to ERROR are classified as errors.
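The error rule above can be sketched in a few lines of Python. This is an illustrative model only: the `Span` and `SpanEvent` classes and the helper functions are hypothetical, and the status values mirror the otel.status_code and otel.status_description attributes described in this section.

```python
from dataclasses import dataclass, field


@dataclass
class SpanEvent:
    """Hypothetical span event, such as an "exception" event."""
    name: str
    attributes: dict = field(default_factory=dict)


@dataclass
class Span:
    """Hypothetical, simplified view of an exported span."""
    name: str
    status_code: str = "UNSET"  # "UNSET", "OK", or "ERROR" (otel.status_code)
    status_description: str = ""  # otel.status_description
    events: list = field(default_factory=list)


def is_error(span):
    """Only the span status decides whether a span is an error."""
    return span.status_code == "ERROR"


def error_details(span):
    """Return the status description shown for error spans, else None."""
    return span.status_description if is_error(span) else None


# An exception event alone does not make the span an error...
handled = Span("GET /cart", events=[SpanEvent("exception", {"exception.type": "TimeoutError"})])
# ...but a span whose status is ERROR is one, even without events.
failed = Span("GET /cart", status_code="ERROR", status_description="upstream timed out")

print(is_error(handled), is_error(failed))  # False True
print(error_details(failed))  # upstream timed out
```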

Page: Service map

The service map page provides a visual representation of your entire architecture. See Service maps for more information.

Page: Transactions

The transactions page provides tools for identifying problems with and analyzing a service's transactions.

For metrics, the queries assume data conforms to the HTTP metric or RPC metric semantic conventions. The http.route and rpc.method attributes are used for listing and filtering by transaction.

For spans, the queries are generic, using only the top-level span data model. Spans are counted towards transaction throughput and response time as described in Golden signals. The span name field is used for listing and filtering by transaction.
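The following Python sketch models how a transaction key might be chosen in each mode: metric data points are keyed by http.route or rpc.method, while entry spans are grouped by span name. The dict-based span shape, the function names, and the choice to fall back from http.route to rpc.method are assumptions made for illustration; the average shown is just one way to summarize response time.

```python
from collections import defaultdict

ENTRY_KINDS = ("server", "consumer")


def transactions_from_spans(spans):
    """Group entry spans by span name and return {name: (count, avg_ms)}.

    Each span is a hypothetical dict with "name", "kind", and "duration_ms".
    """
    durations = defaultdict(list)
    for span in spans:
        if span["kind"] in ENTRY_KINDS:
            durations[span["name"]].append(span["duration_ms"])
    return {name: (len(ds), sum(ds) / len(ds)) for name, ds in durations.items()}


def transaction_from_metric_point(attributes):
    """Key an HTTP or RPC metric data point by http.route, else rpc.method."""
    return attributes.get("http.route") or attributes.get("rpc.method")


spans = [
    {"name": "GET /users/{id}", "kind": "server", "duration_ms": 120},
    {"name": "GET /users/{id}", "kind": "server", "duration_ms": 80},
    {"name": "orders.process", "kind": "consumer", "duration_ms": 300},
    {"name": "SELECT users", "kind": "client", "duration_ms": 15},
]
print(transactions_from_spans(spans))
# {'GET /users/{id}': (2, 100.0), 'orders.process': (1, 300.0)}
```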

Page: Databases

The databases page provides tools for identifying problems with and analyzing a service's database client operations.

There is no metrics-based view of databases because no semantic conventions for database metrics are currently available.

For spans, the queries assume data conforms to the DB span semantic conventions. The span name and the db.system, db.sql.table, and db.operation attributes are used for listing and filtering database operations.
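As a rough model of the database listing, this Python sketch keeps only spans that carry db.system and counts them by (db.system, db.sql.table, db.operation). Spans are represented as hypothetical dicts of attributes, the function name is illustrative, and the span name is left out of the key for brevity.

```python
from collections import Counter


def database_operations(spans):
    """Count DB client spans by (db.system, db.sql.table, db.operation).

    Each span is a hypothetical dict of span attributes. Spans without
    db.system are skipped because they are not database spans.
    """
    counts = Counter()
    for attrs in spans:
        system = attrs.get("db.system")
        if system is None:
            continue
        counts[(system, attrs.get("db.sql.table"), attrs.get("db.operation"))] += 1
    return counts


spans = [
    {"db.system": "postgresql", "db.sql.table": "users", "db.operation": "SELECT"},
    {"db.system": "postgresql", "db.sql.table": "users", "db.operation": "SELECT"},
    {"db.system": "redis", "db.operation": "GET"},
    {"http.request.method": "GET"},  # not a database span
]
for key, count in database_operations(spans).items():
    print(key, count)
# ('postgresql', 'users', 'SELECT') 2
# ('redis', None, 'GET') 1
```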

Page: External services

The external services page provides tools for identifying problems with and analyzing a service's external calls, including calling entities (upstream services) and called entities (downstream services).

There is no metrics-based view of the external services page.

For spans, the queries are generic, using only the top-level span data model. Spans are counted towards external service throughput and response time if they exit the service, which is determined with the heuristic WHERE span.kind = client OR span.kind = producer. Database client spans are filtered out using WHERE db.system is null (see Page: Databases). As with golden signals, spans are errors if they have a status code of ERROR (for example, otel.status_code = ERROR). If available, data from the HTTP span and RPC span semantic conventions is used to classify external service calls.
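The two WHERE clauses above combine into a single predicate. The Python sketch below expresses that heuristic and an error rate over the resulting external calls; the span dict layout and the function names are hypothetical, chosen only to mirror span.kind, db.system, and otel.status_code.

```python
def is_external_call(span):
    """Exit span (client/producer) that is not a database call."""
    exits_service = span["kind"] in ("client", "producer")
    is_database = span.get("attributes", {}).get("db.system") is not None
    return exits_service and not is_database


def external_error_rate(spans):
    """Fraction of external calls whose status code is ERROR."""
    calls = [s for s in spans if is_external_call(s)]
    if not calls:
        return 0.0
    errors = sum(1 for s in calls if s.get("status_code") == "ERROR")
    return errors / len(calls)


spans = [
    {"kind": "client", "status_code": "ERROR", "attributes": {"http.request.method": "GET"}},
    {"kind": "producer", "attributes": {"messaging.system": "kafka"}},
    {"kind": "client", "attributes": {"db.system": "mysql"}},  # database span, excluded
    {"kind": "server", "attributes": {}},  # entry span, excluded
]
print(external_error_rate(spans))  # 0.5
```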

Page: JVM runtime

The JVM runtime page provides tools for identifying problems with and analyzing a Java service's JVM. The page is only displayed for services using OpenTelemetry Java. To distinguish between service instances, the page requires the service.instance.id resource attribute to be set (see Services for more details).

The JVM runtime page shows golden signals alongside JVM runtime metrics to correlate runtime issues with service usage. The metrics or spans toggle dictates whether the golden signals are driven by span or metric data. There is no spans-based view for JVM runtime metrics.

For metrics, the queries assume data conforms to the JVM metric semantic conventions. Note that these conventions are implemented by the OpenTelemetry Java runtime instrumentation library, which is automatically included with the OpenTelemetry Java agent.

Page: Go runtime

The Go runtime page provides tools for identifying problems with and analyzing a Go service's runtime. The page is only displayed for services using OpenTelemetry Go. In order to differentiate between distinct service instances, the page requires the service.instance.id resource attribute to be set (see Services for more details).

The Go runtime page shows golden signals alongside Go runtime metrics to correlate runtime issues with service usage. The metrics or spans toggle dictates whether the golden signals are driven by span or metric data. There is no spans-based view for Go runtime metrics.

For metrics, the queries assume data is produced by the OpenTelemetry Go runtime instrumentation library. Note that there are currently no semantic conventions for Go runtime metrics.

Page: Logs

The Logs page provides tools for identifying problems and analyzing a service's logs. See Use logs UI for more information.

Page: Errors inbox

The errors inbox page provides tools for detecting and triaging a service's errors. See Getting started with errors inbox for more details.

The errors inbox page is driven by trace data. As with golden signals, spans are classified as errors if the span status is set to ERROR (for example, otel.status_code = ERROR).

Error spans are grouped together by their error fingerprint, which is computed by normalizing away identifying values such as UUIDs, hex values, and email addresses. Each distinct error span is an individual instance within the error group. The error group message is taken from the first of the following that is present:

- Span status description (for example, otel.status_description)
- rpc.grpc.status_code from RPC span semantic conventions
- http.status_code from HTTP span semantic conventions
- http.response.status_code from HTTP span semantic conventions
- undefined if none of the above are present
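The grouping and message rules can be approximated in Python as shown below. This is a hypothetical sketch: the regular expressions, the SHA-256 hashing, and all function names are illustrative assumptions, and New Relic's actual normalization rules are not described here. It shows the idea that messages differing only in UUIDs, emails, or hex values share one fingerprint, and that the group message is the first available value in the order listed above.

```python
import hashlib
import re

# Hypothetical normalization rules, applied in order (UUIDs before generic hex).
NORMALIZERS = [
    (re.compile(r"\b[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}\b"), "<uuid>"),
    (re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"), "<email>"),
    (re.compile(r"\b0x[0-9a-fA-F]+\b|\b[0-9a-fA-F]{16,}\b"), "<hex>"),
]

# Attributes checked for the group message, in the order listed above.
MESSAGE_KEYS = (
    "otel.status_description",
    "rpc.grpc.status_code",
    "http.status_code",
    "http.response.status_code",
)


def normalize(message):
    """Replace identifying values so that similar errors compare equal."""
    for pattern, placeholder in NORMALIZERS:
        message = pattern.sub(placeholder, message)
    return message


def fingerprint(message):
    """Hash the normalized message into a stable group key."""
    return hashlib.sha256(normalize(message).encode("utf-8")).hexdigest()


def error_group_message(attributes):
    """Return the first present value from MESSAGE_KEYS, else "undefined"."""
    for key in MESSAGE_KEYS:
        value = attributes.get(key)
        if value not in (None, ""):
            return str(value)
    return "undefined"


a = "user 3fa85f64-5717-4562-b3fc-2c963f66afa6 not found"
b = "user 9c5b94b1-35ad-49bb-b118-8e8fc24abf80 not found"
print(normalize(a))  # user <uuid> not found
print(fingerprint(a) == fingerprint(b))  # True
print(error_group_message({"http.response.status_code": 503}))  # 503
```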

Page: Metrics explorer

The Metrics explorer provides tools for exploring a service's metrics in a generic manner. See Explore your data for more information.

Golden signals

The golden signals of throughput, response time, and error rate appear in several places throughout the OpenTelemetry APM UI. When used, they are computed as follows:

For metrics, the queries assume data conforms to the HTTP metric or RPC metric semantic conventions.

For spans, the queries are generic, using only the top-level span data model. Spans are counted towards throughput and response time if they are root entry spans into the service, which is determined with the heuristic WHERE span.kind = server OR span.kind = consumer. Spans are errors if they have a status code of ERROR (for example, otel.status_code = ERROR).
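A minimal Python sketch of the span-based golden signals follows, assuming a fixed time window. The `Span` class and `golden_signals` function are hypothetical; using the mean for response time and computing error rate over entry spans only are simplifying assumptions for illustration, not a statement of how the UI aggregates.

```python
from dataclasses import dataclass


@dataclass
class Span:
    """Hypothetical, simplified view of an exported span."""
    kind: str
    duration_ms: float
    status_code: str = "UNSET"


def golden_signals(spans, window_minutes):
    """Throughput, mean response time, and error rate from entry spans."""
    entries = [s for s in spans if s.kind in ("server", "consumer")]
    if not entries:
        return {"throughput_rpm": 0.0, "avg_response_ms": 0.0, "error_rate": 0.0}
    errors = sum(1 for s in entries if s.status_code == "ERROR")
    return {
        "throughput_rpm": len(entries) / window_minutes,
        "avg_response_ms": sum(s.duration_ms for s in entries) / len(entries),
        "error_rate": errors / len(entries),
    }


spans = [
    Span("server", 100),
    Span("server", 300, "ERROR"),
    Span("consumer", 200),
    Span("client", 50, "ERROR"),  # exit span: ignored for golden signals
]
result = golden_signals(spans, window_minutes=1.0)
print(result["throughput_rpm"], result["avg_response_ms"])  # 3.0 200.0
```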

Narrow data with filters

Several pages include a filter bar, with options like Narrow data to.... This allows you to filter queries on the page to match the criteria. For example, you might narrow to a particular canary deployment by filtering for service.version='1.2.3-canary'. Filters are preserved when navigating between pages.
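Conceptually, a filter narrows every query on the page to records whose attributes match the criteria. The Python sketch below models that with a hypothetical `narrow` function over attribute dicts; treating multiple criteria as a logical AND is an assumption made for the example.

```python
def narrow(records, criteria):
    """Keep records whose attributes match every key/value pair in criteria."""
    return [r for r in records if all(r.get(k) == v for k, v in criteria.items())]


records = [
    {"name": "GET /cart", "service.version": "1.2.3-canary"},
    {"name": "GET /cart", "service.version": "1.2.2"},
]
print(narrow(records, {"service.version": "1.2.3-canary"}))
# [{'name': 'GET /cart', 'service.version': '1.2.3-canary'}]
```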

Metrics or spans toggle

Various pages include a metrics or spans toggle. This allows you to choose whether queries are driven by span or metric data based on analysis requirements and data availability.

Metrics are not sampled and are therefore more accurate, especially when computing rates such as throughput. However, metrics are subject to cardinality constraints and may lack attributes that are important for analysis. Spans, in contrast, are sampled and therefore subject to accuracy issues, but they have richer attributes because they are not subject to cardinality constraints.
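To make the sampling trade-off concrete, this Python sketch compares a counter that sees every request with the number of traces kept by a trace-ID ratio sampler. The sampler function is a simplified illustration of the idea behind OpenTelemetry's TraceIdRatioBased sampler (compare the low 64 bits of the trace ID against ratio × 2^64), not the SDK implementation. The 10% ratio, the one-trace-per-request assumption, and the synthetic trace IDs are all hypothetical.

```python
import random


def trace_id_ratio_sampled(trace_id, ratio):
    """Keep a trace if the low 64 bits of its ID fall below ratio * 2**64."""
    bound = int(ratio * (1 << 64))
    return (trace_id & 0xFFFFFFFFFFFFFFFF) < bound


rng = random.Random(42)  # fixed seed so the example is repeatable
trace_ids = [rng.getrandbits(128) for _ in range(10_000)]  # one trace per request

metric_count = len(trace_ids)  # a request counter metric sees every request
span_count = sum(trace_id_ratio_sampled(t, 0.1) for t in trace_ids)

print(metric_count)  # 10000
print(span_count)  # only the sampled subset, close to 10% of metric_count
```

Counting sampled spans alone undercounts throughput by roughly the sampling ratio, which is why metrics are preferred for rates when they are available.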

Historically, OpenTelemetry language APIs, SDKs, and instrumentation prioritized trace instrumentation. However, the project has matured, and metrics are now available in almost all languages. Check the documentation for the relevant language and instrumentation for more details.

Golden metrics

Golden metrics are low-cardinality versions of golden signals data, such as HTTP/RPC metrics. They populate various platform experiences, including the Entity Explorer, Workloads Activity page, and Change Tracking Details page. These metrics use names like newrelic.goldenmetrics.ext.service.*.

Important

Historically, the OpenTelemetry golden metrics were calculated from spans. Spans are usually sampled, so they only provide a partial picture. Now that metrics are broadly available, golden metrics are calculated using metrics data rather than span data.