If you're a developer running applications in Kubernetes, you can use New Relic to understand how the Kubernetes infrastructure affects your OpenTelemetry-instrumented applications.

After completing the steps below, you can use the New Relic UI to correlate application-level metrics from OpenTelemetry with Kubernetes infrastructure metrics. This allows you to see the entire landscape of your telemetry data, and collaborate across teams to achieve faster mean time to resolution (MTTR) for issues in your Kubernetes environment.

How we correlate the data

This document walks you through enabling your application to inject infrastructure-specific metadata into the telemetry data. The result is that the New Relic UI is populated with actionable information. Here are the steps you'll take to get started:

In each application container, define an environment variable to send telemetry data to the Collector.

Deploy the OpenTelemetry Collector as a DaemonSet in agent mode with resourcedetection, resource, batch, and k8sattributes processors to inject relevant metadata (cluster, deployment, and namespace names).

Before you begin

To successfully complete the steps below, you should already be familiar with OpenTelemetry and Kubernetes, and have done the following:

Created these environment variables:

OTEL_EXPORTER_OTLP_ENDPOINT: See New Relic OTLP endpoint for more info.

NEW_RELIC_API_KEY: See New Relic API keys for more info.

Installed your Kubernetes cluster with OpenTelemetry.

Instrumented your applications with OpenTelemetry, and successfully sent data to New Relic via OpenTelemetry Protocol (OTLP).

If you have general questions about using Collectors with New Relic, see our Introduction to OpenTelemetry Collector with New Relic.

Configure your application to send telemetry data to the OpenTelemetry Collector

To set this up, you need to add a custom snippet to the env section of your Kubernetes YAML file. The example below shows the snippet for a sample frontend microservice (Frontend.yaml). The snippet includes 2 sections that do the following:

Section 1: Ensure that the telemetry data is sent to the Collector. This sets the environment variable OTEL_EXPORTER_OTLP_ENDPOINT with the host IP. It does this by calling the downward API to pull the host IP.

Section 2: Attach infrastructure-specific metadata. To do this, we capture metadata.uid using the downward API and add it to the OTEL_RESOURCE_ATTRIBUTES environment variable. This environment variable is used by the OpenTelemetry Collector's resourcedetection and k8sattributes processors to add additional infrastructure-specific context to telemetry data.

For each microservice instrumented with OpenTelemetry, add the lines marked Section 1 and Section 2 below to your manifest's env section:

    # Frontend.yaml
    apiVersion: apps/v1
    kind: Deployment

    # ...
    spec:
      containers:
        - name: yourfrontendservice
          image: yourfrontendservice-beta
          env:
            # Section 1: Ensure that telemetry data is sent to the collector
            - name: HOST_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.hostIP
            # This is picked up by the opentelemetry sdks
            - name: OTEL_EXPORTER_OTLP_ENDPOINT
              value: "http://$(HOST_IP):55680"
            # Section 2: Attach infrastructure-specific metadata
            # Get the pod name and UID so that k8sattributes can tag resources
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_UID
              valueFrom:
                fieldRef:
                  fieldPath: metadata.uid
            # This is picked up by the resource detector
            - name: OTEL_RESOURCE_ATTRIBUTES
              value: "service.instance.id=$(POD_NAME),k8s.pod.uid=$(POD_UID)"

Configure and deploy the OpenTelemetry Collector

We recommend you deploy the Collector as an agent on every node within a Kubernetes cluster. The agent can receive telemetry data, and enrich telemetry data with metadata. For example, the Collector can add custom attributes or infrastructure information through processors, as well as handle batching, retry, compression and additional advanced features that are handled less efficiently at the client instrumentation level.
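For the agent to receive what the applications send, its configuration needs an OTLP receiver listening on the port used in OTEL_EXPORTER_OTLP_ENDPOINT. The fragment below is a minimal sketch, assuming OTLP over gRPC and the port 55680 from the application manifest above. That port is a legacy choice; current OpenTelemetry defaults use 4317 for OTLP over gRPC, and either works as long as both sides agree.

```yaml
receivers:
  otlp:
    protocols:
      grpc:
        # Assumption: must match the port in the application's
        # OTEL_EXPORTER_OTLP_ENDPOINT (55680 in the sample manifest).
        endpoint: 0.0.0.0:55680
```

Because the applications address the node's IP rather than a Service, the agent container's port also needs to be exposed on the host, for example with a matching hostPort in the DaemonSet.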

You can choose one of these options to monitor your cluster:

(Recommended) Install your Kubernetes cluster using OpenTelemetry: This option automatically deploys the Collector as an agent. Everything works out of the box: you'll have the Kubernetes metadata in the APM telemetry and the Kubernetes UIs.

Manual configuration and deployment: If you prefer to configure it manually, follow these steps:

Configure the OTLP exporter

Add an OTLP exporter to your OpenTelemetry Collector configuration YAML file along with your New Relic API key as a header.

    exporters:
      otlp:
        endpoint: $OTEL_EXPORTER_OTLP_ENDPOINT
        headers:
          api-key: $NEW_RELIC_API_KEY

Configure the batch processor

The batch processor accepts spans, metrics, or logs and places them in batches. This makes it easier to compress data and reduce outbound requests from the Collector.

    processors:
      batch:

Configure the resource detection processor

The resourcedetection processor gets host-specific information to add additional context to the telemetry data being processed through the Collector. In this example, we use Google Kubernetes Engine (GKE) and Google Compute Engine (GCE) to get Google Cloud-specific metadata, including:

cloud.provider ("gcp")

cloud.platform ("gcp_compute_engine")

cloud.account.id

cloud.region

cloud.availability_zone

host.id

host.image.id

host.type

    processors:
      resourcedetection:
        detectors: [gke, gce]
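Resource detection only fills in what the environment can report about itself. For values it can't discover, the resource processor named in the overview can set attributes explicitly. The fragment below is a minimal sketch, not part of the original walkthrough; my-cluster is a hypothetical placeholder for your own cluster name.

```yaml
processors:
  resource:
    attributes:
      # Hypothetical value: replace my-cluster with your cluster's name.
      - key: k8s.cluster.name
        value: my-cluster
        # upsert sets the attribute whether or not it already exists.
        action: upsert
```

If you would rather keep a value that is already present on incoming telemetry, the insert action only adds the attribute when it is missing.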

Configure the Kubernetes attributes processor (general)

When we run the k8sattributes processor as part of the OpenTelemetry Collector running as an agent, it detects the IP addresses of pods sending telemetry data to the OpenTelemetry Collector agent, using them to extract pod metadata. Below is a basic Kubernetes manifest example with only a processors section. To deploy the OpenTelemetry Collector as a DaemonSet, read this comprehensive manifest example.

    processors:
      k8sattributes:
        auth_type: "serviceAccount"
        passthrough: false
        filter:
          node_from_env_var: KUBE_NODE_NAME
        extract:
          metadata:
            - k8s.pod.name
            - k8s.pod.uid
            - k8s.deployment.name
            - k8s.cluster.name
            - k8s.namespace.name
            - k8s.node.name
            - k8s.pod.start_time
        pod_association:
          - from: resource_attribute
            name: k8s.pod.uid

Configure the Kubernetes attributes processor (RBAC)

You need to add configurations for role-based access control (RBAC). The k8sattributes processor needs get, watch, and list permissions for the pod and namespace resources included in the configured filters. This example shows how to configure RBAC with a ClusterRole to give a ServiceAccount the necessary permissions for all pods and namespaces in the cluster.

Configure the Kubernetes attributes processor (discovery filter)

When running the Collector as an agent, you should apply a discovery filter so that the processor only discovers pods from the same host that it's running on. If you don't use a filter, resource usage can be unnecessarily high, especially on very large clusters. Once the filter is applied, each processor will only query the Kubernetes API for pods running on its own node.

To set the filter, use the downward API to inject the node name as an environment variable in the pod env section of the OpenTelemetry Collector agent configuration YAML file. For an example, see the otel-collector-config.yml file on GitHub. This will inject a new environment variable into the OpenTelemetry Collector agent's container. The value will be the name of the node the pod was scheduled to run on.

    spec:
      containers:
        - env:
            - name: KUBE_NODE_NAME
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: spec.nodeName

Then, you can filter by the node with the k8sattributes processor:

    k8sattributes:
      filter:
        node_from_env_var: KUBE_NODE_NAME
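The RBAC requirements described earlier (get, watch, and list on pods and namespaces) can be sketched as the manifest below. This is an illustration under stated assumptions rather than New Relic's own example: the otel-collector name and the observability namespace are hypothetical placeholders, and the ServiceAccount would also need to be referenced from the agent's pod spec through serviceAccountName.

```yaml
# Hypothetical names throughout: otel-collector, observability.
apiVersion: v1
kind: ServiceAccount
metadata:
  name: otel-collector
  namespace: observability
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: otel-collector
rules:
  # Read-only access to the resources the k8sattributes processor queries.
  - apiGroups: [""]
    resources: ["pods", "namespaces"]
    verbs: ["get", "watch", "list"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: otel-collector
subjects:
  - kind: ServiceAccount
    name: otel-collector
    namespace: observability
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: otel-collector
```

Depending on the Collector version, resolving k8s.deployment.name may also require read access to replicasets in the apps API group, so it is worth checking the processor's documentation for the release you run.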

Validate that your configurations are working

You should be able to verify that your configurations are working once you have successfully linked your OpenTelemetry data with your Kubernetes data.

Caution

The Kubernetes summary page only displays data from applications monitored by New Relic agents or OpenTelemetry. If your environment uses a mix of different instrumentation providers, you may not see complete data on this page.

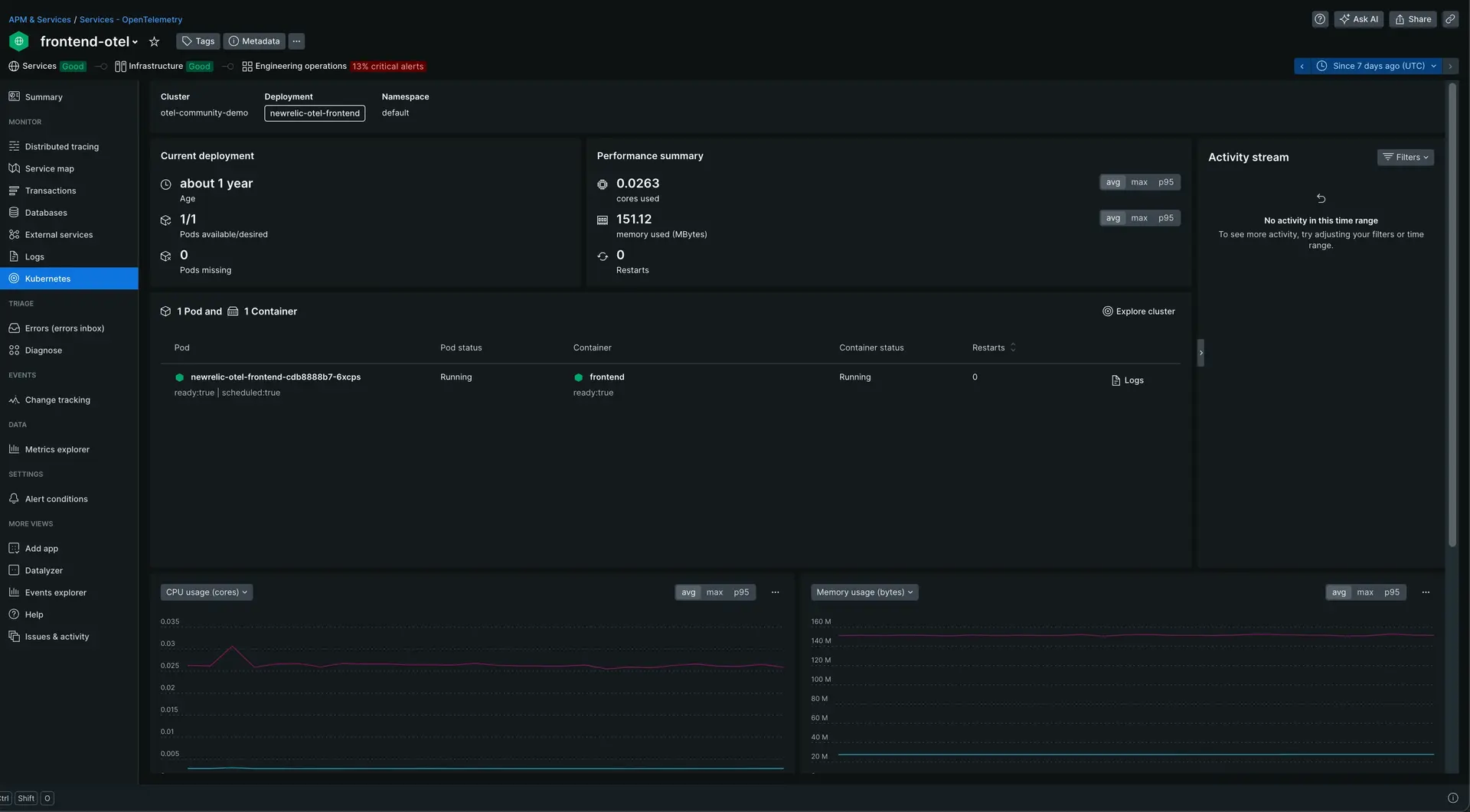

Go to one.newrelic.com > All capabilities > APM & Services and select your application inside Services - OpenTelemetry.

Click Kubernetes on the left navigation pane.

Go to one.newrelic.com > All capabilities > APM & Services > (selected app) > Kubernetes to see the Kubernetes summary page.
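If the Kubernetes summary page stays empty, one thing worth checking is that every component configured above is actually referenced in a pipeline, since defining a processor or exporter does not by itself put it to use. The fragment below is a sketch rather than a complete configuration. It assumes an otlp receiver and the otlp exporter are defined elsewhere in the same file, the processor order shown is one reasonable choice (batch last), and the debug exporter is an optional addition whose availability depends on your Collector version (older releases shipped the equivalent as logging).

```yaml
exporters:
  # Optional and temporary: prints received telemetry, including resource
  # attributes, to the Collector's own log output.
  debug:
    verbosity: detailed

service:
  pipelines:
    traces:
      # Assumption: an otlp receiver is configured elsewhere in this file.
      receivers: [otlp]
      processors: [k8sattributes, resourcedetection, batch]
      exporters: [otlp, debug]
```

With something like this in place, the agent pod's logs should show whether attributes such as k8s.pod.uid and k8s.namespace.name are being attached to incoming spans. Metrics and logs pipelines follow the same shape, and the debug exporter can be removed once the data looks right.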