Install the Kubernetes integration

New Relic's Kubernetes integration gives you full observability into the health and performance of your infrastructure. With it, you can collect telemetry data from your cluster using several New Relic components, such as the Kubernetes events integration, the Prometheus agent, nri-kubernetes, and the New Relic Logs Kubernetes plugin.

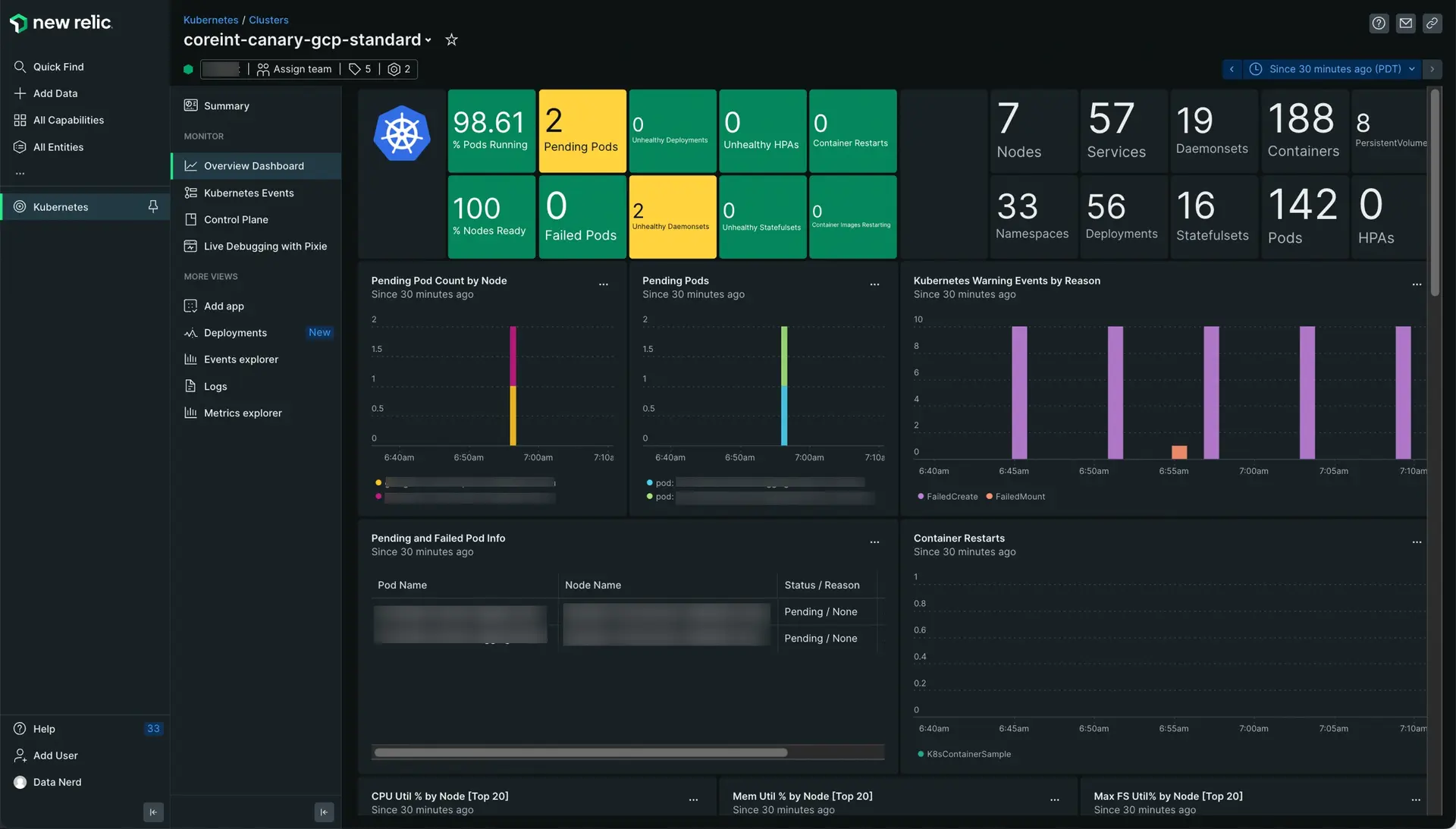

Go to one.newrelic.com > All capabilities > Kubernetes. Select your cluster and click Overview Dashboard in the left navigation pane. See Explore your Kubernetes cluster for more info.

Important

If you're going to install the New Relic Kubernetes integration on EKS Fargate, see the Install Kubernetes on AWS EKS Fargate document.

Follow these steps to install the New Relic Kubernetes integration.

Choose your next step

Configure control plane monitoring

Learn how to monitor and collect metrics from your cluster's control plane components.

Explore your Kubernetes cluster

Learn how to interpret the data displayed in the Kubernetes curated UIs: view the status of your cluster, identify entities, access the cluster dashboard, search for Kubernetes events, and more.

Find and use your Kubernetes data

See how to use queries, charts, dashboards, alerts, and more.

Kubernetes plugin for log forwarding

Learn how to collect, process, explore, query, and alert on your log data. Our Kubernetes plugin simplifies sending logs from your cluster to New Relic Logs.