The streaming platform checks for alert events based on data that's present or missing in your stream of data, or signal, coming into New Relic.

You can use NRQL conditions to control what part of the signal you want to be notified about. Your NRQL condition filters the data that's processed by the streaming algorithm.

There are three methods for aggregating the data filtered through your NRQL condition:

- Event flow (default)

- Event timer

- Cadence

This short video describes the three aggregation methods (5:31).

Why it matters

Understanding how streaming alerts work will help you fine-tune your NRQL conditions to be notified about what's important to you.

Only data that matches the conditions of the NRQL WHERE clause is alerted on. For more details on each step of the process, see Streaming alerts process and descriptions.

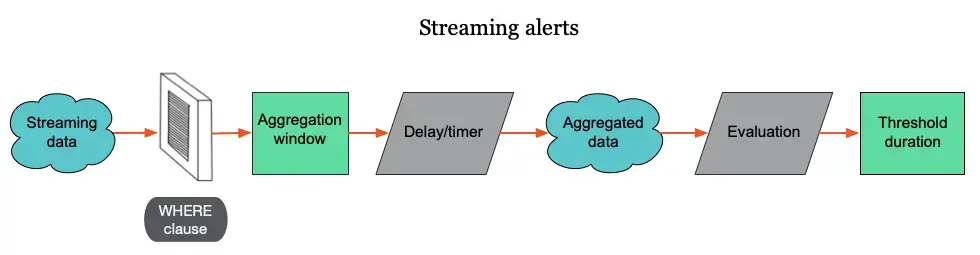

As data streams into New Relic, it's filtered by the NRQL condition. Before data is evaluated, it must meet the criteria defined by the NRQL query's WHERE clause. Instead of evaluating that data immediately for alert events, the NRQL alert conditions collect the data over a period of time known as the aggregation window. An additional delay/timer allows for slower data points to arrive before the window is aggregated.

Once the delay/timer time has elapsed, New Relic aggregates the data into a single data point. Alerts then compares the data point to the condition's threshold criteria to determine whether an alert event should be opened.
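To make the filter-then-aggregate flow concrete, here is a minimal Python sketch. It is not New Relic's implementation: the event shape, the `where` predicate, the `aggregate_windows` name, and the choice of an average as the aggregation function are all assumptions for illustration. It ignores the delay/timer and only shows how filtered data points are grouped by timestamp and collapsed into one data point per window.

```python
from collections import defaultdict
from statistics import mean

WINDOW_SECONDS = 60  # assumed aggregation window duration


def aggregate_windows(events, where, window_seconds=WINDOW_SECONDS):
    """Filter events, bucket them by timestamp, and average each window."""
    buckets = defaultdict(list)
    for event in events:
        if where(event):  # stands in for the NRQL WHERE clause
            start = event["timestamp"] // window_seconds * window_seconds
            buckets[start].append(event["value"])
    # One aggregated data point per window, keyed by window start time.
    return {start: mean(values) for start, values in sorted(buckets.items())}


# Hypothetical events; timestamps are seconds from an arbitrary origin.
events = [
    {"timestamp": 0, "host": "web-1", "value": 40.0},
    {"timestamp": 30, "host": "web-1", "value": 60.0},
    {"timestamp": 45, "host": "db-1", "value": 99.0},  # filtered out
    {"timestamp": 70, "host": "web-1", "value": 80.0},
]
points = aggregate_windows(events, where=lambda e: e["host"] == "web-1")
print(points)  # {0: 50.0, 60: 80.0}
```

Each value in `points` is what would then be compared to the condition's threshold.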

Even if a data point meets the criteria for an alert event, an alert event may not be opened. An alert event is only opened when data points consistently meet the threshold criteria over a period of time. This is the threshold duration. If the data points meet the threshold criteria for the entire threshold duration, we'll send you a notification based on your policy settings.
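The threshold duration check can be sketched in Python as follows. This is an illustration only: it assumes an "above threshold" condition and expresses the threshold duration as a number of consecutive aggregated data points. The `alert_opens` function and its parameters are hypothetical names.

```python
def alert_opens(points, threshold, duration_points):
    """Return the index of the data point that opens an alert event, or None.

    An alert event opens only after `duration_points` consecutive
    aggregated data points exceed `threshold`.
    """
    streak = 0
    for index, value in enumerate(points):
        # A single data point below the threshold resets the streak.
        streak = streak + 1 if value > threshold else 0
        if streak >= duration_points:
            return index
    return None


cpu = [50, 95, 60, 92, 94, 97, 70]
opened_at = alert_opens(cpu, threshold=90, duration_points=3)
print(opened_at)  # 5
```

The isolated spike of 95 does not open an alert event because the next data point falls back below the threshold. Only the run of 92, 94, 97 does.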

All of these configurable delays give you more control over how you're alerted on sporadic and missing data.

Streaming alerts process and descriptions

| Process | Description |
|---|---|
| Streaming data | All data coming into New Relic. |
| WHERE clause | Filters all incoming streaming data. We only monitor for alerts on data that makes it through this filter. |
| Aggregation methods | One of three methods that control how data is collected before it's evaluated: event flow (default), event timer, and cadence. |
| Aggregation window | Data with timestamps that fall within this window will be aggregated and then evaluated. |
| Sliding windows | When enabled, it causes aggregation windows to overlap, creating smoother charts. Use the sliding windows duration to set the amount of time your aggregation windows overlap. Important: Customers on Advanced and Core Compute pricing plans may incur additional CCU charges when using sliding window aggregation. While this method enhances data analysis by smoothing out fluctuations, it may cost more than other methods. For details, refer to the pricing section for sliding windows. To determine whether you are on an Advanced or Core Compute pricing plan, refer to your Order. |
| Delay/timer | A time delay to ensure all data points have arrived in the aggregation window before aggregation occurs. |
| Aggregated data | Data in the aggregation window is collapsed to a single data point for alert evaluation. |
| Evaluation | The data point is evaluated by the NRQL condition, which is triggered by each incoming aggregated data point. |
| Threshold duration | A specific duration that determines if an alert event is created. If your specified NRQL condition meets the threshold criteria over the threshold duration, an alert event occurs. When a data point lacks data, a custom value is inserted to fill the gap. |

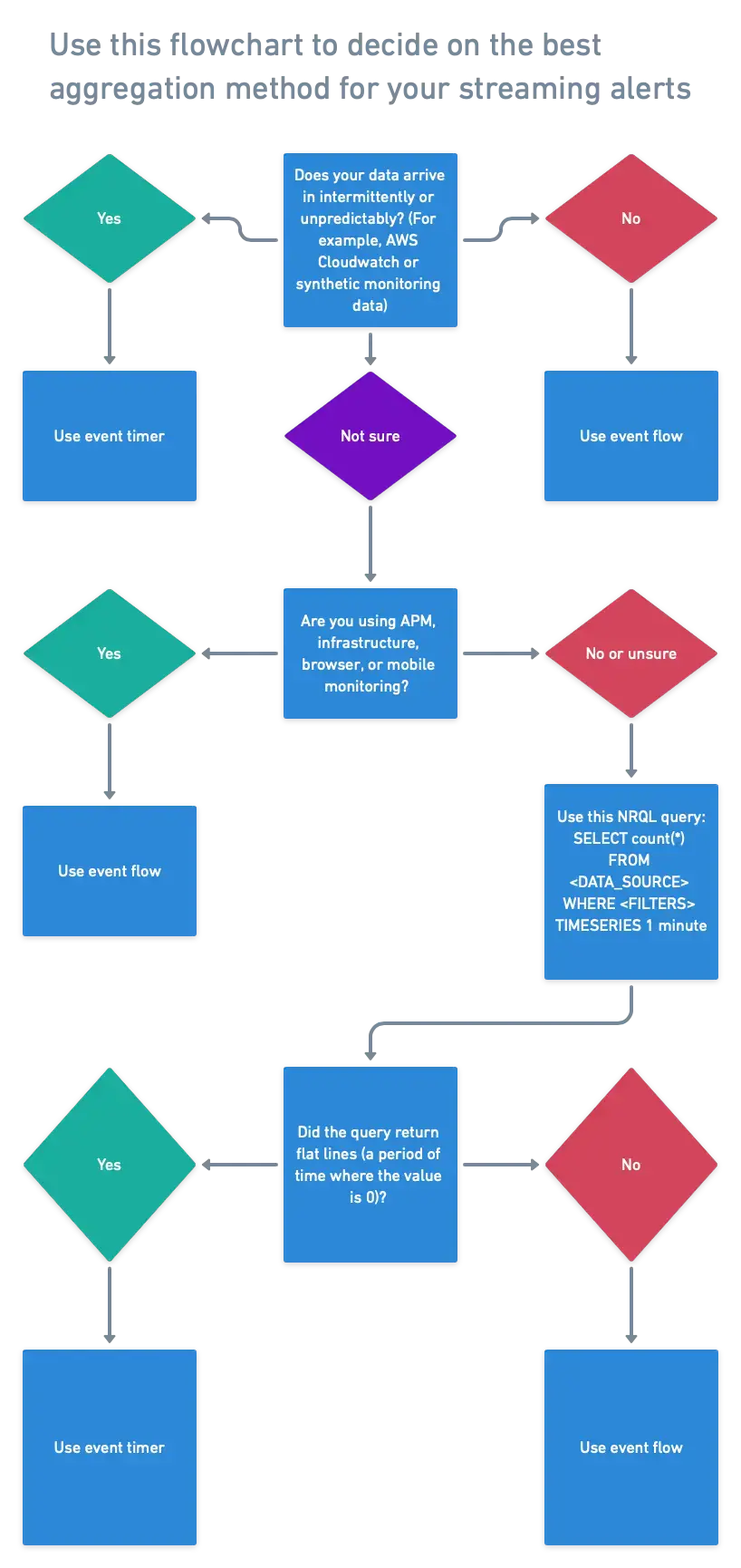

Choose your aggregation method

You can choose between three different aggregation methods, depending on your needs.

Event flow (default) works best for data that comes in frequently and mostly in order.

Event timer works best for data that arrives infrequently in batches, such as cloud integration data or infrequent error logs.

Cadence is our original and inferior aggregation method. It aggregates data on specific time intervals as detected by New Relic's internal wall clock, regardless of data timestamps.


Event flow

Event flow aggregates a window of data when the first data point arrives in a subsequent window. The custom delay defines which subsequent window data will start to populate to trigger aggregation of the current window. A custom delay provides extra time for data to arrive. These times are based on the data's timestamps and not New Relic's wall clock time.

For example, suppose you're monitoring CPU usage with a window duration of 1 minute and a 3-minute delay.

When a CPU usage data point comes in with a timestamp between 12:00pm and 12:01pm, event flow will not aggregate that window right away. It waits until the first data point arrives with a timestamp of 12:04pm or later, then sends the 12:00 to 12:01 data to be aggregated.
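The example above can be simulated with a short Python sketch. This is a simplified model, not New Relic's code: timestamps are seconds counted from 12:00pm, the `event_flow` function is a hypothetical name, and dropping data points that arrive for an already released window is an assumption.

```python
def event_flow(points, window=60, delay=180):
    """Release windows based on data timestamps, not wall clock time.

    `points` is a list of (timestamp_seconds, value) in arrival order.
    Returns a list of (window_start, values) in release order.
    """
    open_windows = {}
    released = []
    newest = None  # latest data timestamp seen so far
    for ts, value in points:
        newest = ts if newest is None else max(newest, ts)
        start = ts // window * window
        if start + window + delay <= newest:
            continue  # assumed: too late, that window was already released
        open_windows.setdefault(start, []).append(value)
        # A window is released once data arrives that is at least
        # `delay` seconds past the end of that window.
        for w in sorted(open_windows):
            if w + window + delay <= newest:
                released.append((w, open_windows.pop(w)))
    return released


# Seconds since 12:00pm. 239 is 12:03:59 and 240 is 12:04:00.
points = [(10, 40), (50, 60), (130, 55), (239, 70), (240, 65)]
print(event_flow(points[:4]))  # []
released = event_flow(points)
print(released)  # [(0, [40, 60])]
```

The 12:00 to 12:01 window stays open through the 12:03:59 data point and is released only when the 12:04:00 data point arrives.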

Caution

If you expect your data points to arrive more than 65 minutes apart, please use the event timer method described below.

Event timer

Like event flow, event timer will only aggregate data for a given window when data arrives for that window. When a data point arrives for an aggregation window, a timer dedicated to that window starts to count down. If no further data arrives before the timer counts down, the data for that window is aggregated. If more data points arrive before the timer has completed counting down, the timer is reset.

For example, suppose you're monitoring CloudWatch data that arrives fairly infrequently. You're using a window duration of 1 minute and a 3-minute timer.

When a CloudWatch data point comes in with a timestamp between 12:00pm and 12:01pm, the timer will start to count down. If no further data points show up for that 12:00-12:01 window, the window will get aggregated 3 minutes later.

If a new data point with a timestamp between 12:00 and 12:01 arrives, the timer resets. It keeps resetting every time more data points for that window arrive. The window will not be sent for aggregation until the timer reaches 0.

If the timer for a later window elapses before the timer for an earlier window, the event timer method waits for the earlier timer to elapse before aggregating the later window.

For best results, make sure your timer is equal to or longer than your window duration time. If the timer is less than your window duration and your data flow is inconsistent, then your data may be evaluated before all of your data points arrive. This could cause you to be notified incorrectly.
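Here is a minimal Python sketch of the event timer behavior described in this section. It is a batch simplification under stated assumptions: every arrival is known up front, times are in seconds, and the `event_timer` function and its tuple layout are hypothetical. It does not model data that arrives after a window has already been aggregated.

```python
def event_timer(arrivals, window=60, timer=180):
    """Compute when each window would be aggregated.

    `arrivals` is a list of (arrival_time, data_timestamp, value) in
    arrival order. Returns (aggregated_at, window_start, values) tuples.
    """
    deadlines = {}
    values = {}
    for arrival, ts, value in arrivals:
        start = ts // window * window
        values.setdefault(start, []).append(value)
        # Every new data point for a window resets that window's timer.
        deadlines[start] = arrival + timer
    released = []
    ready_at = 0
    for start in sorted(deadlines):
        # A later window waits until all earlier windows have elapsed.
        ready_at = max(ready_at, deadlines[start])
        released.append((ready_at, start, values[start]))
    return released


in_order = event_timer([(30, 10, 5.0), (100, 40, 7.0), (110, 70, 9.0)])
print(in_order)  # [(280, 0, [5.0, 7.0]), (290, 60, [9.0])]

# A late data point for the first window holds back the second window.
late = event_timer([(30, 10, 5.0), (110, 70, 9.0), (200, 40, 7.0)])
print(late)  # [(380, 0, [5.0, 7.0]), (380, 60, [9.0])]
```

In the second run, the second window's own timer would elapse at 290 seconds, but it is held until 380 seconds because the first window's timer was reset by the late data point.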

Cadence

We recommend you use one of the other two methods.

Cadence is our old streaming aggregation method. This method uses New Relic's wall clock time to determine when data is aggregated and evaluated. It doesn't take into account data point timestamps as they arrive.

Streaming alerts tools

Streaming alerts provide a set of tools that give you greater control over how your data is aggregated before it's evaluated, which reduces the number of incorrect notifications you receive. They are:

- Window duration

- Delay/timer

- Loss of signal detection

- Gap filling

Tip

This article covers these tools at a conceptual level. You'll find direct instructions on how to use these tools in Create NRQL alert conditions.

Window duration

In order to make loss of signal detection more effective and to reduce unnecessary notifications, you can customize aggregation windows to the duration that you need.

An aggregation window is a specific block of time. We gather data points together in an aggregation window, before evaluating the data. A longer aggregation window can smooth out the data, since an outlier data point will have more data points to be aggregated with, giving it less of an influence on the aggregated data point that is sent for evaluation. When a data point arrives, its timestamp is used to put it in the proper aggregation window.

You can set your aggregation window to anything between 30 seconds and 6 hours. The default is 1 minute.
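The smoothing effect of a longer window can be seen in a small Python sketch. The sample data, the `window_averages` helper, and the use of an average as the aggregation function are assumptions for illustration.

```python
from statistics import mean


def window_averages(samples, window_seconds):
    """Place each (timestamp, value) in its window and average per window."""
    buckets = {}
    for ts, value in samples:
        start = ts // window_seconds * window_seconds
        buckets.setdefault(start, []).append(value)
    return {start: mean(vals) for start, vals in sorted(buckets.items())}


# One outlier (100) among otherwise steady values, one sample per minute.
samples = [(0, 10), (60, 10), (120, 100), (180, 10), (240, 10)]

one_minute = window_averages(samples, 60)
five_minute = window_averages(samples, 300)
print(max(one_minute.values()))  # 100
print(five_minute)  # {0: 28}
```

With 1-minute windows, the outlier is evaluated on its own as 100. With a 5-minute window, it is averaged with four other data points and contributes to a single aggregated value of 28.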

Delay/timer

The delay/timer setting controls how long the condition should wait before aggregating the data in the aggregation window.

The event flow and cadence methods use delay. Event timer uses timer.

The delay default is 2 minutes. The timer default is 1 minute, with a minimum value of 5 seconds and a maximum value of 20 minutes.
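If you manage condition settings in your own tooling, the limits stated in this article can be checked before you submit them. The following Python sketch is a hypothetical helper, not part of any New Relic API. It only encodes the window duration range (30 seconds to 6 hours) and the timer range (5 seconds to 20 minutes) given above.

```python
def check_settings(window_seconds=60, timer_seconds=60):
    """Return a list of problems with the given settings (empty if none)."""
    problems = []
    if not 30 <= window_seconds <= 6 * 3600:
        problems.append("window duration must be between 30 seconds and 6 hours")
    if not 5 <= timer_seconds <= 20 * 60:
        problems.append("timer must be between 5 seconds and 20 minutes")
    return problems


print(check_settings())  # []
print(len(check_settings(window_seconds=10, timer_seconds=3)))  # 2
```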

Loss of signal detection

Loss of signal occurs when no data matches the NRQL condition over a specific period of time. It can have several causes: the WHERE clause in your NRQL query may filter out data before it's evaluated for alert events, a service or entity may be offline, or a periodic job may have failed to run so that no data is sent to New Relic.

To avoid unnecessary notifications, you can choose how long to wait before you're notified of a loss of signal alert event. You can use loss of signal detection to open alert events and be notified when a signal is lost. Alternatively, you can use a loss of signal to close alert events for ephemeral services or sporadic data, such as error counts.
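The waiting period can be pictured with a short Python sketch. It assumes a simplified model in which a signal counts as lost once no matching data has arrived for a configured number of seconds. The `loss_of_signal_times` function and the `expiration` parameter are hypothetical names.

```python
def loss_of_signal_times(arrival_times, expiration, end_time):
    """Return the moments at which a loss of signal would be declared.

    `arrival_times` are the times (in seconds) at which matching data
    arrived, and `end_time` is the moment the observation stops.
    """
    lost_at = []
    times = sorted(arrival_times) + [end_time]
    for previous, current in zip(times, times[1:]):
        # Silence of at least `expiration` seconds counts as a lost signal.
        if current - previous >= expiration:
            lost_at.append(previous + expiration)
    return lost_at


lost = loss_of_signal_times([0, 60, 120, 600, 660], expiration=300, end_time=1000)
print(lost)  # [420, 960]
```

A longer `expiration` tolerates longer silences, which suits sporadic signals. A shorter one notifies you sooner when a service goes offline.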

Gap filling

Gap filling lets you customize the values to use when your signals don't have any data. You can fill gaps in your data streams with the last value received, a static value, or else do nothing and leave the gap there. The default is None.

Gaps in streaming data can have several causes: network or host issues, a sparse signal, or signals, such as error counts, that only have data when something is wrong. By filling the gaps with known values, the alert evaluation process can process those gaps and determine how they should affect the loss of signal evaluation.
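The three gap filling options can be sketched in Python as follows. This is an illustration only: empty windows are represented as `None`, and the `fill_gaps` function and its strategy names are hypothetical.

```python
def fill_gaps(points, strategy="none", static_value=0):
    """Fill empty windows (None) in a list of aggregated data points.

    strategy: "none" leaves gaps in place, "last_value" repeats the
    last value received, "static" inserts `static_value`.
    """
    filled = []
    last = None
    for value in points:
        if value is not None:
            last = value
            filled.append(value)
        elif strategy == "last_value":
            filled.append(last)  # stays None if no value was seen yet
        elif strategy == "static":
            filled.append(static_value)
        else:
            filled.append(None)
    return filled


series = [5, None, None, 8, None]
print(fill_gaps(series))                            # [5, None, None, 8, None]
print(fill_gaps(series, "last_value"))              # [5, 5, 5, 8, 8]
print(fill_gaps(series, "static", static_value=0))  # [5, 0, 0, 8, 0]
```

For a signal such as an error count, a static value of 0 is often the natural choice, since a window without data usually means no errors occurred.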

Tip

The alerts system fills gaps in actively reported signals. This signal history is dropped after 2 hours of inactivity. For gap filling, data points received after this period of inactivity are treated as new signals.

To learn more about signal loss and gap filling, see this Support Forum post.